Buterin’s latest long article: Should the Ethereum protocol encapsulate more functions?

Original title: Should Ethereum be okay with enshrining more things in the protocol?

Original author: Vitalik Buterin

Odaily Translator - Nian Yin Si Tang

Special thanks to Justin Drake, Tina Zhen and Yoav Weiss for their feedback and reviews.

From the beginning of the Ethereum project, there has been a strong philosophy of keeping core Ethereum as simple as possible and doing as much as possible by building protocols on top of it. In the blockchain space, the “build on L1” vs. “focus on L2” debate is often thought of as primarily about scaling, but similar questions arise in meeting many other needs of Ethereum users: digital asset exchange, privacy, usernames, advanced cryptography, account security, censorship resistance, front-running protection, and more. Recently, however, there have been some cautious attempts to enshrine more of these features into the core Ethereum protocol.

This article will delve into some of the reasoning behind the original philosophy of minimal encapsulation (that is, enshrining as little as possible in the protocol itself), as well as some more recent ways of thinking about these ideas. The goal is to start building a framework for better identifying cases where encapsulating certain functionality might be worth considering.

An early philosophy on protocol minimalism

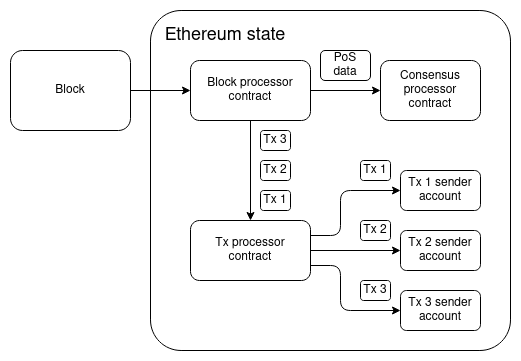

In the early history of what was then known as “Ethereum 2.0”, there was a strong desire to create a clean, simple, and beautiful protocol that tried to do as little as possible itself and left almost everything to users to build on top. Ideally, the protocol would be just a virtual machine, and validating a block would be just a virtual machine call.

This is an approximate reconstruction of the whiteboard diagram Gavin Wood and I drew in early 2015 when we were discussing what Ethereum 2.0 would look like.

The state transition function (the function that processes a block) would be just a single VM call, and all other logic would happen through contracts: some system-level contracts, but mostly user-provided contracts. A very nice feature of this model is that even an entire hard fork could be described as a single transaction to the block processor contract, which would be approved by off-chain or on-chain governance and then run with escalated permissions.

These discussions in 2015 applied particularly to two areas we had in mind: account abstraction and scaling. In the case of scaling, the idea was to create a maximally abstract form of scaling that would feel like a natural extension of the diagram above. Contracts could call data that is not stored by most Ethereum nodes, and the protocol would detect this and resolve the call via some very general scaled-computation functionality. From the virtual machine’s perspective, the call would go off into some separate subsystem and then magically return the correct answer some time later.

We briefly explored this idea but quickly abandoned it because we were too focused on proving that any kind of blockchain scaling was possible at all. As we will see later, though, the combination of data availability sampling and ZK-EVM means that a possible future for Ethereum scaling actually looks very close to this vision! With account abstraction, on the other hand, we knew from the start that some kind of implementation was possible, so research immediately began on making something as close as possible to the purist starting point of “a transaction is just a call”.

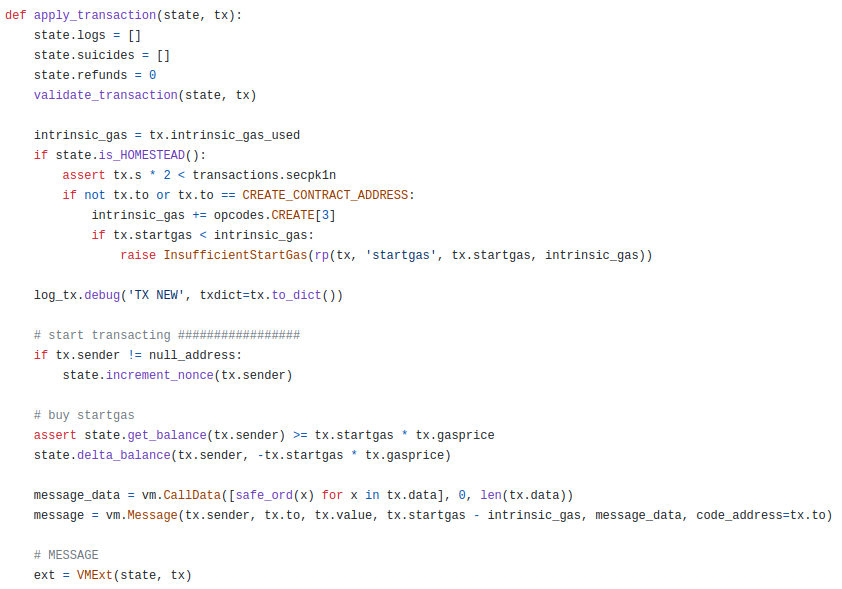

There is a lot of boilerplate code between processing the transaction and making the actual underlying EVM call from the sender address, and more boilerplate code after that. How do we reduce this code as close to zero as possible?

One of the main pieces of code here is validate_transaction(state, tx), which is responsible for checking that the nonce and signature of the transaction are correct. The real goal of account abstraction from the beginning has been to allow users to replace the basic nonce-incrementing and ECDSA verification with their own verification logic, so that they can more easily use features such as social recovery and multi-signature wallets. So finding a way to restructure apply_transaction into a simple EVM call is not just a “make the code clean for the sake of clean code” task; rather, it’s about moving the logic into the user’s account code to give users the flexibility they need.
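The structure described above can be sketched roughly as follows. This is a minimal illustration, not actual client code: the Account, Tx, and State types, the stubbed verify_signature and evm_call helpers, and the flat gas accounting are all assumptions made for readability. Only the names validate_transaction and apply_transaction come from the text.

```python
from dataclasses import dataclass, field


@dataclass
class Account:
    balance: int = 0
    nonce: int = 0


@dataclass
class Tx:
    sender: str
    to: str
    value: int
    nonce: int
    gas_limit: int
    gas_price: int
    signature_ok: bool  # stand-in for a real ECDSA check (assumption)


@dataclass
class State:
    accounts: dict = field(default_factory=dict)

    def get(self, addr: str) -> Account:
        return self.accounts.setdefault(addr, Account())


def verify_signature(tx: Tx) -> bool:
    # Hypothetical stub: a real client would recover the signer via ECDSA.
    return tx.signature_ok


def validate_transaction(state: State, tx: Tx) -> bool:
    """The fixed checks that account abstraction wants to make replaceable."""
    acct = state.get(tx.sender)
    has_funds = acct.balance >= tx.value + tx.gas_limit * tx.gas_price
    return tx.nonce == acct.nonce and verify_signature(tx) and has_funds


def evm_call(state: State, sender: str, to: str, value: int, gas: int) -> int:
    # Hypothetical stub for the underlying EVM call: moves value, returns gas used.
    state.get(sender).balance -= value
    state.get(to).balance += value
    return min(gas, 21_000)


def apply_transaction(state: State, tx: Tx) -> bool:
    # Boilerplate before the call: validation, nonce bump, upfront gas charge.
    if not validate_transaction(state, tx):
        return False
    acct = state.get(tx.sender)
    acct.nonce += 1
    acct.balance -= tx.gas_limit * tx.gas_price

    # The part that is "just a call".
    gas_used = evm_call(state, tx.sender, tx.to, tx.value, tx.gas_limit)

    # Boilerplate after the call: refund unused gas.
    acct.balance += (tx.gas_limit - gas_used) * tx.gas_price
    return True
```

In this picture, account abstraction amounts to letting the sender’s own account code stand in for validate_transaction, so that the fixed nonce and signature checks become one possible policy among many.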

However, insisting that apply_transaction contain as little fixed logic as possible ended up creating a lot of challenges. We can look at one of the earliest account abstraction proposals, EIP-86.

If EIP-86 were included as-is, it would reduce the complexity of the EVM at the cost of massively increasing the complexity of other parts of the Ethereum stack, requiring essentially the same code to be written elsewhere, in addition to introducing a whole new class of weirdness. For example, the same transaction with the same hash value may appear multiple times in the chain, not to mention the multiple invalidation problem.

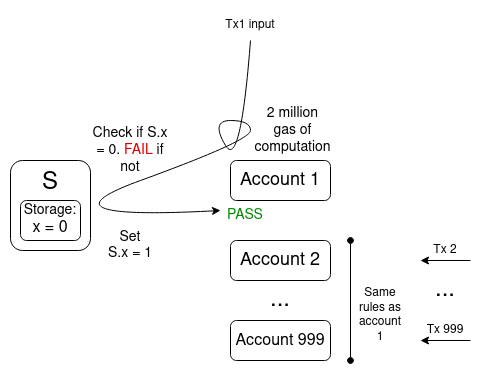

The multiple invalidation problem in account abstraction. One transaction included on-chain could invalidate thousands of other transactions in the mempool, making it easy to flood the mempool cheaply.
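A toy model may make the invalidation problem more concrete. The sketch below assumes, purely for illustration, that each pending transaction’s validity is decided by an account-defined rule that reads a shared storage slot; the dictionary layout and the names is_valid and valid_count are hypothetical.

```python
def is_valid(tx: dict, storage: dict) -> bool:
    # Hypothetical account-defined rule: the transaction is valid only while
    # the storage slot it watches still holds the expected value.
    return storage.get(tx["watched_slot"], 0) == tx["expected_value"]


def valid_count(mempool: list, storage: dict) -> int:
    return sum(is_valid(tx, storage) for tx in mempool)


storage = {"flag": 0}
mempool = [
    {"id": i, "watched_slot": "flag", "expected_value": 0} for i in range(1000)
]

before = valid_count(mempool, storage)

# A single included transaction flips the shared slot...
storage["flag"] = 1

# ...and every pending transaction that depended on it is now invalid.
after = valid_count(mempool, storage)
print(before, after)
```

Nodes bear the cost of validating and propagating all 1,000 pending transactions, while fees are paid only for the one transaction that actually lands on-chain.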

Since then, account abstraction has evolved in stages. EIP-86 later became EIP-208 and eventually the practical EIP-2938.

However, EIP-2938 is anything but minimalist. Its contents include:

A new transaction type

Three new transaction-scoped global variables

Two new opcodes, including the very clunky PAYGAS opcode, which handles gas price and gas limit checks, serves as an EVM execution breakpoint, and temporarily stores ETH for fee payment, all at once

A complex set of mining and rebroadcasting strategies, including a list of opcodes prohibited during the transaction verification phase

In an effort to achieve account abstraction without involving Ethereum core developers (who were focused on optimizing Ethereum clients and implementing the Merge), EIP-2938 was ultimately re-architected as ERC-4337, which sits entirely outside the protocol.

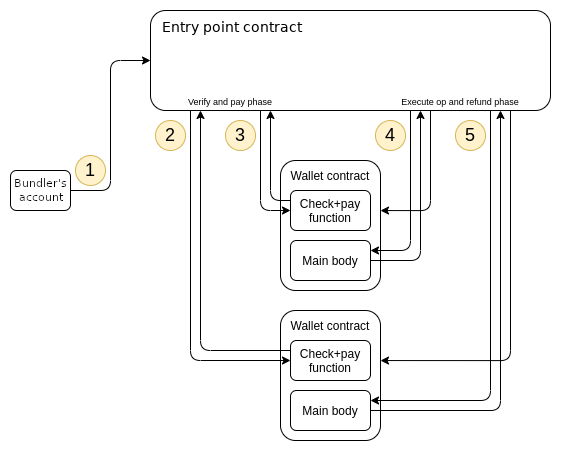

ERC-4337. It does rely entirely on EVM calls!

Because this is an ERC, it does not require a hard fork and technically exists outside the Ethereum protocol. So... problem solved? It turns out not to be the case. The current medium-term roadmap for ERC-4337 actually involves eventually turning large parts of ERC-4337 into a series of protocol features, which makes it an instructive example of the reasons why this path is being considered.

Encapsulating ERC-4337

There are several key reasons discussed for eventually bringing ERC-4337 back into the protocol:

Gas efficiency: Any operation performed inside the EVM incurs some degree of virtual machine overhead, including inefficiencies when using gas-expensive features such as storage slots. Currently, these additional inefficiencies add up to at least 20,000 gas, and often more. Putting these components into the protocol is the easiest way to eliminate these problems.

Code bug risk: If the ERC-4337 “entry point contract” had a sufficiently serious bug, all ERC-4337 compatible wallets could see their funds drained. Replacing the contract with in-protocol functionality creates an implicit obligation to fix code bugs through hard forks, thereby removing the risk of users losing funds.

Support for EVM opcodes such as tx.origin: ERC-4337 by itself causes tx.origin to return the address of the bundler that packaged a set of user operations into a transaction. Native account abstraction could solve this problem by making tx.origin point to the actual account sending the transaction, so that it behaves the same as for an EOA.

Censorship resistance: One of the challenges with proposer/builder separation is that it becomes easier to censor individual transactions. In a world where the Ethereum protocol can identify individual transactions, this problem can be greatly alleviated by inclusion lists, which allow proposers to specify a list of transactions that must be included within the next two slots in almost all cases. However, ERC-4337, being outside the protocol, wraps user operations inside a single transaction, making them opaque to the Ethereum protocol; therefore, inclusion lists provided by the Ethereum protocol cannot provide censorship resistance for ERC-4337 user operations. Encapsulating ERC-4337 and making the user operation a proper transaction type would solve this problem.

It’s worth mentioning that in its current form, ERC-4337 is significantly more expensive than “basic” Ethereum transactions: a transaction costs 21,000 gas, while ERC-4337 costs around 42,000 gas.

In theory, it should be possible to adjust the EVM gas cost system until in-protocol costs and the costs of accessing storage outside the protocol match; there is no reason why transferring ETH should cost 9,000 gas when other kinds of storage-editing operations are cheaper. In fact, two EIPs related to the upcoming Verkle tree transition attempt to do just that. But even if we do that, there’s an obvious reason why, no matter how efficient the EVM becomes, encapsulated protocol functions will inevitably be much cheaper than EVM code: encapsulated code doesn’t need to pay gas for being pre-loaded.

A fully functional ERC-4337 wallet is large: this implementation, compiled and put on-chain, takes up about 12,800 bytes. Of course, you could deploy this code once and use DELEGATECALL to allow each individual wallet to call it, but the code would still need to be accessed in every block where it is used. Under the Verkle tree gas cost EIP, 12,800 bytes would form 413 chunks. Accessing these chunks would require paying 2 times WITNESS_BRANCH_COST (a total of 3,800 gas) and 413 times WITNESS_CHUNK_COST (a total of 82,600 gas). And that doesn’t even begin to mention the ERC-4337 entry point itself, which in version 0.6.0 takes up 23,689 bytes on-chain (approximately 158,700 gas to load under the Verkle tree EIP rules).
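The arithmetic behind these figures can be reproduced with a short calculation. The per-unit costs below are inferred from the totals quoted above (3,800 / 2 and 82,600 / 413); the 31-byte chunk size and the rule of one branch per 256 chunks are assumptions chosen because they reproduce the article’s numbers, and the function name code_load_gas is hypothetical.

```python
import math

# Per-unit costs inferred from the totals quoted in the text.
WITNESS_BRANCH_COST = 1_900  # 3,800 gas / 2 branches
WITNESS_CHUNK_COST = 200     # 82,600 gas / 413 chunks

# Assumptions chosen to reproduce the article's chunk and branch counts.
BYTES_PER_CHUNK = 31
CHUNKS_PER_BRANCH = 256


def code_load_gas(code_size_bytes: int) -> dict:
    """Estimate the witness gas for touching every chunk of a contract's code."""
    chunks = math.ceil(code_size_bytes / BYTES_PER_CHUNK)
    branches = math.ceil(chunks / CHUNKS_PER_BRANCH)
    branch_gas = branches * WITNESS_BRANCH_COST
    chunk_gas = chunks * WITNESS_CHUNK_COST
    return {
        "chunks": chunks,
        "branches": branches,
        "branch_gas": branch_gas,
        "chunk_gas": chunk_gas,
        "total_gas": branch_gas + chunk_gas,
    }


wallet = code_load_gas(12_800)       # the wallet implementation
entry_point = code_load_gas(23_689)  # the ERC-4337 entry point, v0.6.0

print(wallet)
print(entry_point)
```

Under these assumptions the wallet code comes to 413 chunks and 86,400 gas in total, and the entry point to 765 chunks and 158,700 gas, in line with the figures above.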

This leads to a problem: the gas cost of actually accessing this code must be amortized across transactions in some way. The current approach used by ERC-4337 is not very good: the first transaction in a bundle bears the one-time storage/code read costs, making it much more expensive than the other transactions. In-protocol encapsulation would allow these commonly shared libraries to simply be part of the protocol, freely accessible to everyone.

What can we learn from this example, and when should encapsulation be practiced more generally?

In this example, we see several different rationales for encapsulating account abstraction in the protocol.

Market-based approaches that “push complexity to the edge” are most likely to fail when fixed costs are high. In fact, the long-term account abstraction roadmap looks set to have a lot of fixed costs per block. The 244,100 gas for loading standardized wallet code is one thing; but aggregation can add hundreds of thousands more gas for ZK-SNARK verification, plus the on-chain cost of proof verification. There is no way to charge users for these costs without introducing massive market inefficiencies, and turning some of these features into protocol features that are freely accessible to everyone would solve this problem neatly.

Community-wide response to code bugs. If some piece of code is used by all users, or a very broad range of users, it often makes more sense for the blockchain community to take on the responsibility of hard-forking to fix any bugs that arise. ERC-4337 introduces a large amount of globally shared code, and in the long run it is surely more reasonable to fix bugs in that code through a hard fork than to let users lose a large amount of ETH.

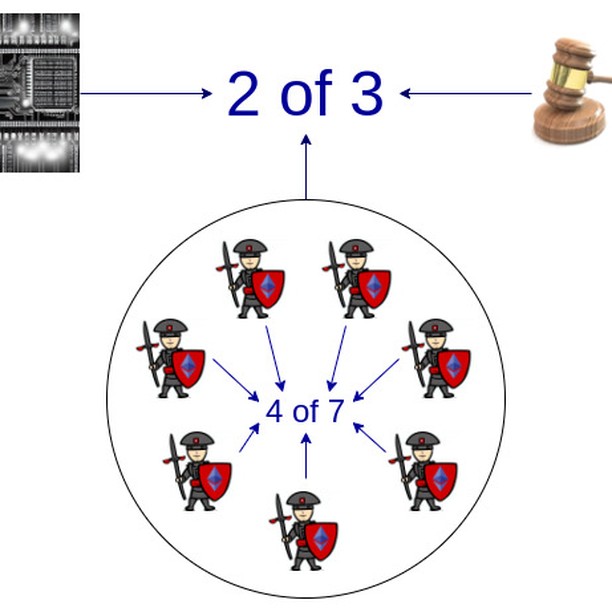

Sometimes, a stronger form of a feature can be implemented by directly leveraging the powers of the protocol. The key example here is in-protocol censorship resistance features such as inclusion lists: in-protocol inclusion lists can guarantee censorship resistance better than out-of-protocol methods. For user-level operations to truly benefit from in-protocol inclusion lists, individual user-level operations need to be legible to the protocol. Another lesser-known example is that the 2017-era Ethereum proof-of-stake design had account abstraction for staking keys, which was abandoned in favor of encapsulating BLS because BLS supports an aggregation mechanism that has to be implemented at the protocol and network levels, and that makes processing large numbers of signatures much more efficient.

But it’s important to remember that even encapsulated in-protocol account abstraction is still a huge de-encapsulation compared with the status quo. Today, top-level Ethereum transactions can only be initiated from externally owned accounts (EOAs), which are verified using a single secp256k1 elliptic curve signature. Account abstraction eliminates this and leaves validation conditions for users to define. Therefore, in this story about account abstraction, we also see the biggest argument against encapsulation: the flexibility to meet the needs of different users.

Let’s flesh out the story further by looking at a few other examples of features that have recently been considered for encapsulation. We will pay special attention to ZK-EVM, proposer-builder separation, private mempools, liquid staking, and new precompiles.

Encapsulating ZK-EVM

Let’s turn our attention to another potential encapsulation target for the Ethereum protocol: ZK-EVM. Currently, we have a large number of ZK-rollups that all have to write fairly similar code to verify the execution of similar Ethereum blocks in ZK-SNARKs. There is a fairly diverse ecosystem of independent implementations: PSE ZK-EVM, Kakarot, Polygon ZK-EVM, Linea, Zeth, and many more.

A recent controversy in the EVM ZK-rollup world has to do with how to handle bugs that may arise in ZK code. Currently, all of the systems that are live have some form of “security council” mechanism that can override the proving system in the event of a bug. Last year, I tried to create a standardized framework that would encourage projects to be clear about how much they trust the proving system and how much they trust the security council, and to gradually reduce the council’s powers over time.

In the medium term, rollups are likely to rely on multiple proving systems, with the security council having power only in the extreme case where two different proving systems disagree with each other.
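The decision rule described here can be sketched in a few lines. This is only an illustration under simplifying assumptions: each proving system and the council is modeled as a plain function from a claimed state root to accept/reject, and the names Verdict and settle are hypothetical rather than taken from any rollup’s code.

```python
from typing import Callable, Sequence

# Hypothetical interface: maps a claimed state root to accept (True) or reject (False).
Verdict = Callable[[str], bool]


def settle(claimed_root: str, provers: Sequence[Verdict], council: Verdict) -> bool:
    """Accept or reject a claimed state root using several proving systems."""
    if not provers:
        raise ValueError("at least one proving system is required")

    verdicts = {prover(claimed_root) for prover in provers}
    if len(verdicts) == 1:
        # All proving systems agree, so the council has no say.
        return verdicts.pop()

    # The proving systems diverge: only now does the council decide.
    return council(claimed_root)
```

The point of the design is that the council’s function is never even called on the common path; it acts purely as a tie-breaker when independent implementations disagree, which is taken as evidence that at least one of them has a bug.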

However, there is a sense in which some of this work feels redundant. We already have an Ethereum base layer, it has an EVM, and we already have a working mechanism for dealing with bugs in implementations: if there is a bug, the buggy client is updated to fix it, and the chain continues to function. Blocks that appeared finalized from the perspective of the buggy client would end up no longer finalized, but at least we would not see users losing funds. Likewise, if a rollup just wants to be and remain EVM-equivalent, it feels wrong that it needs to implement its own governance to keep changing its internal ZK-EVM rules to match upgrades to the Ethereum base layer, when ultimately it is built on top of the Ethereum base layer itself, which knows when it is being upgraded and to what new rules.

Since these L2 ZK-EVMs basically use the exact same EVM as Ethereum, can we somehow make “verify EVM execution in ZK” a protocol feature, and handle exceptional situations such as bugs and upgrades by applying Ethereum’s social consensus, as we already do for base-layer EVM execution itself?

This is an important and challenging topic.

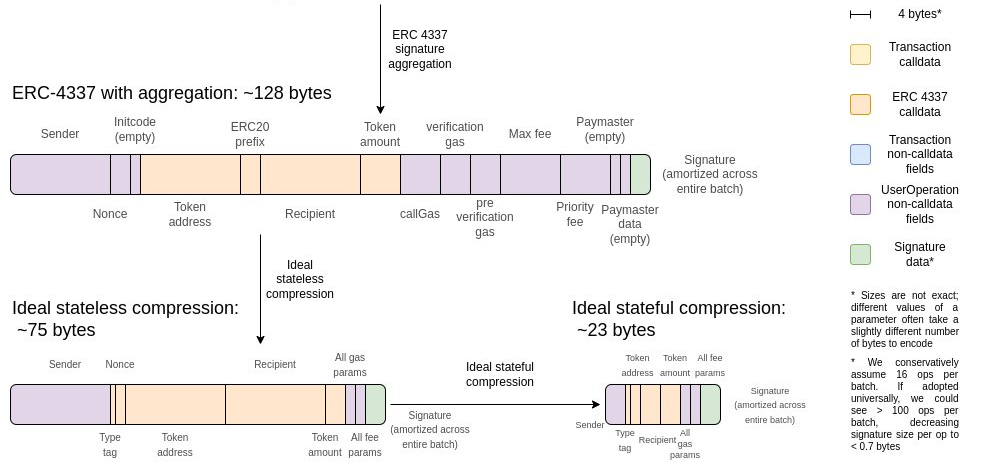

One possible topic of debate regarding data availability in a native ZK-EVM is statefulness. ZK-EVMs would be much more data-efficient if they didn’t need to carry “witness” data. That is, if a particular piece of data has already been read or written in some previous block, we can simply assume that the prover has access to it and does not need to make it available again. It’s not just about not reloading storage and code; it turns out that if a rollup compresses data properly, stateful compression can save up to 3x the data compared with stateless compression.

This means that for a ZK-EVM precompile we have two options:

1. The precompile requires all data to be available in the same block. This means that the prover can be stateless, but it also means that a ZK-rollup using this precompile would be much more expensive than a rollup using custom code.

2. The precompile allows pointers to data used or generated by previous executions. This makes the ZK-rollup close to optimal, but it is more complex and introduces a new kind of state that must be stored by provers.

What can we learn from this? There is a good reason to encapsulate ZK-EVM verification in some way: rollups are already building their own custom versions of it, and it feels wrong that Ethereum is willing to put the weight of its multiple implementations and off-chain social consensus behind EVM execution on L1, while L2s doing the exact same work have to implement complex gadgets involving security councils. But on the other hand, there’s a big catch in the details: there are different versions of ZK-EVM, with different costs and benefits. The distinction between stateful and stateless only scratches the surface; attempts to support “almost-EVM” custom code that has been proven by other systems may expose an even larger design space. Therefore, encapsulating ZK-EVM brings both promise and challenges.

Encapsulating proposer-builder separation (ePBS)

The rise of MEV has made block production an economic activity with significant economies of scale, with sophisticated actors able to produce blocks that generate more revenue than the default algorithm, which simply watches the mempool for transactions and includes them. So far, the Ethereum community has tried to address this problem with off-protocol proposer-builder separation schemes such as MEV-Boost, which allow regular validators (“proposers”) to outsource block building to specialized actors (“builders”).

However, MEV-Boost makes trust assumptions in a new class of actors called relays. Over the past two years, there have been many proposals to create an “encapsulated PBS”. What are the benefits of doing this? In this case, the answer is very simple: a PBS built by directly using the powers of the protocol is stronger (in the sense of having weaker trust assumptions) than a PBS built without them. This is similar to the argument for encapsulating price oracles in the protocol, although in that case there are also strong counterarguments.

Encapsulating private mempools

When a user sends a transaction, it is immediately public and visible to everyone, even before it is included on-chain. This leaves users of many applications vulnerable to economic attacks, such as front-running.

Recently, there have been a number of projects dedicated to creating “private mempools” (or “encrypted mempools”), which keep users’ transactions encrypted until they are irreversibly accepted into a block.

The problem, however, is that such schemes require a special kind of encryption: to prevent users from flooding the system and front-running the decryption process, the encryption must decrypt automatically once the transaction has actually been irreversibly accepted.

There are various techniques, with different tradeoffs, for implementing this form of encryption. Jon Charbonneau once described them well:

Encryption to a centralized operator, such as Flashbots Protect.

Time-lock encryption, a form of encryption that anyone can decrypt after a certain number of sequential computation steps, which cannot be parallelized.

Threshold encryption, which trusts an honest-majority committee to decrypt the data. See the Shutterized beacon chain concept for a specific proposal.

Trusted hardware, such as SGX.

Unfortunately, each of these encryption methods has different weaknesses. While for each solution there is a segment of users willing to trust it, no solution is trusted enough to actually be accepted into Layer 1. So, at least until delay encryption is perfected or some other technological breakthrough occurs, encapsulating anti-front-running functionality in Layer 1 seems to be a difficult proposition, even though it is a valuable enough feature that many application-layer solutions have already emerged.

Encapsulating liquid staking

A common need among Ethereum DeFi users is the ability to use their ETH simultaneously for staking and as collateral in other applications. Another common need is simply convenience: users want to be able to stake without the complexity of running a node and keeping it always online (and protecting their now-online staking keys).

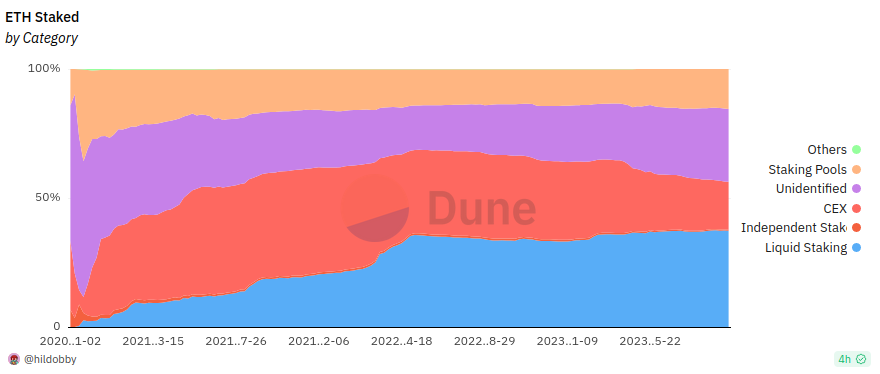

By far the simplest staking “interface” that meets both of these needs is just an ERC-20 token: convert your ETH into “staked ETH”, hold it, and then convert it back. In fact, liquid staking providers like Lido and Rocket Pool are already doing this. However, there are some natural centralizing mechanisms at play in liquid staking: people will naturally gravitate toward the largest version of staked ETH because it is the most familiar and the most liquid.

Each version of staked ETH needs some mechanism to determine who can become an underlying node operator. It cannot be unrestricted, because then attackers would join and use users’ funds to amplify their attacks. Currently, the top two are Lido, which has DAO-whitelisted node operators, and Rocket Pool, which allows anyone to run a node with a deposit of 8 ETH. The two approaches have different risks: the Rocket Pool approach allows attackers to 51% attack the network while forcing users to pay most of the costs; with the DAO approach, if a single staking token dominates, it leads to a single, potentially compromised governance gadget controlling a large portion of all Ethereum validators. To their credit, protocols like Lido have implemented safeguards against this, but one layer of defense may not be enough.

In the short term, one option is to encourage ecosystem participants to use a diverse set of liquid staking providers to reduce the chance of a single dominant player posing systemic risk. In the long term, however, this is an unstable equilibrium, and overreliance on moral pressure to solve problems is dangerous. A natural question arises: would it make sense to encapsulate some kind of functionality in the protocol to make liquid staking less centralizing?

The key question here is: what kind of in-protocol functionality? One problem with simply creating a fungible “staked ETH” token in the protocol is that it would either need to have encapsulated Ethereum-wide governance to choose who gets to run nodes, or be open-entry, which would turn it into a vehicle for attackers.

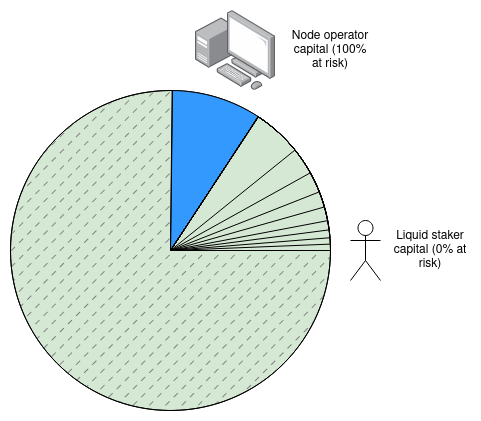

One interesting idea is Dankrad Feist’s writing on liquid staking maximalism. First, let’s bite the bullet and accept that if Ethereum is hit by a 51% attack, only perhaps 5% of the attacking ETH gets slashed. This is a reasonable trade-off; with over 26 million ETH currently staked, a cost of attack of a third of that (~8 million ETH) is already overkill, especially considering how many “out-of-model” attacks can be carried out at a lower cost. In fact, similar trade-offs have already been explored in the “super-committee” proposals for implementing single-slot finality.

If we accept that only 5% of the attacking ETH is slashed, then over 90% of staked ETH would be invulnerable to slashing, and so could be used as a fungible liquid staking token within the protocol, which could then be used by other applications.

This path is interesting. But it still leaves open one question: what exactly would be encapsulated? Rocket Pool already works in a very similar way: each node operator provides some of the funds, and liquid stakers provide the rest. We could simply adjust some constants to cap the maximum slashing penalty at, say, 2 ETH, and Rocket Pool’s existing rETH would become risk-free.
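A small worked example shows why a capped penalty makes the pooled deposits risk-free. The sketch assumes a 32 ETH validator in which the operator posts an 8 ETH bond (the Rocket Pool deposit figure mentioned earlier) and that slashing penalties are taken from the operator’s bond before touching depositor funds; the function name depositor_loss is hypothetical.

```python
def depositor_loss(
    penalty_eth: float, max_penalty_eth: float, operator_bond_eth: float
) -> float:
    """ETH lost by depositors after the operator's bond absorbs the first losses."""
    # The protocol never slashes more than the cap.
    effective_penalty = min(penalty_eth, max_penalty_eth)
    # Assumption: the operator's own bond is burned first.
    return max(0.0, effective_penalty - operator_bond_eth)


OPERATOR_BOND = 8.0    # ETH posted by the node operator
VALIDATOR_SIZE = 32.0  # total ETH per validator

# No cap: a full slashing wipes out the bond and 24 ETH of depositor funds.
uncapped = depositor_loss(32.0, VALIDATOR_SIZE, OPERATOR_BOND)

# Capped at 2 ETH: the bond fully covers the worst case.
capped = depositor_loss(32.0, 2.0, OPERATOR_BOND)

print(uncapped, capped)
```

As long as the cap stays at or below the operator’s bond, depositor funds are never touched by slashing, which is the sense in which the pooled token becomes risk-free in this model.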

With simple protocol adjustments, we can also do other clever things. For example, let’s say we want a system with two tiers of staking: node operators (high collateral requirements) and depositors (no minimum collateral requirements, able to join and leave at any time), but we still want to guard against node operator centralization by giving a randomly sampled committee of depositors powers such as suggesting lists of transactions that must be included (for censorship resistance reasons), controlling fork choice during an inactivity leak, or needing to sign off on blocks. This could be accomplished in a mostly out-of-protocol way by tweaking the protocol to require each validator to provide (i) a regular staking key, and (ii) an ETH address that can be called in each slot to output a secondary staking key. The protocol would give powers to both keys, but the mechanism for choosing the second key in each slot could be left to staking pool protocols. It may still be better to encapsulate some functionality directly, but it is worth noting that this design space of “encapsulate some things and leave other things to users” exists.

Encapsulating more precompiles

Precompiles (or “precompiled contracts”) are Ethereum contracts that implement complex cryptographic operations, with their logic implemented natively in client code rather than in EVM smart contract code. Precompiles are a compromise adopted at the beginning of Ethereum’s development: since the overhead of a virtual machine is too great for some very complex and highly specialized code, we can implement a few key operations that are valuable to important applications in native code to make them faster. Today, this basically covers a few specific hash functions and elliptic curve operations.

There is currently a push to add a precompile for secp256r1, an elliptic curve slightly different from the secp256k1 used for basic Ethereum accounts; because it is well supported by trusted hardware modules, widespread use of it could increase wallet security. In recent years, the community has also pushed to add precompiles for BLS-12-377, BW6-761, generalized pairings, and other features.

The counterargument to these calls for more precompiles is that many of the precompiles added previously (such as RIPEMD and BLAKE) ended up being used far less than expected, and we should learn from this. Rather than adding more precompiles for specific operations, we should perhaps focus on a more moderate approach based on ideas like EVM-MAX and the dormant-but-always-revivable SIMD proposal, which would allow EVM implementations to execute wide classes of code less expensively. Perhaps even the existing little-used precompiles could be removed and replaced with (inevitably less efficient) EVM code implementations of the same functions. That said, it’s still possible that there are specific cryptographic operations valuable enough to be worth accelerating, in which case it makes sense to add them as precompiles.

What have we learned from all this?

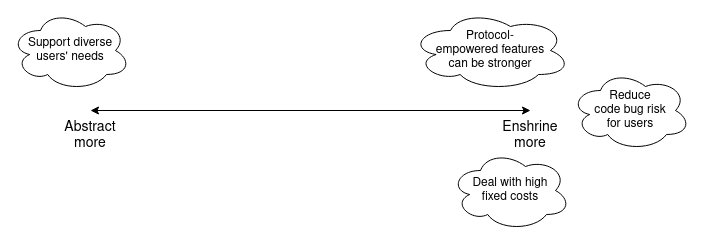

The desire to encapsulate as little as possible is understandable and good; it stems from the Unix philosophy tradition of creating minimalist software that can easily adapt to users’ different needs and avoid the curse of software bloat. However, a blockchain is not a personal-computing operating system; it is a social system. This means that it can make sense to encapsulate certain functionality in the protocol.

In many cases, these other examples are similar to what we saw with account abstraction. But we also learned some new lessons:

Encapsulating functionality can help avoid centralization risks in other areas of the stack:

Often, keeping the base protocol minimal and simple pushes complexity out to some ecosystem outside of the protocol. From a Unix philosophy perspective, this is fine. However, there is sometimes a risk that the off-protocol ecosystem will become centralized, usually (but not only) because of high fixed costs. Encapsulation can sometimes reduce de facto centralization.

Encapsulating too much may overextend the trust and governance burden on the protocol:

This is the topic of the earlier article “Don’t overload Ethereum’s consensus”: if encapsulating a specific feature weakens the trust model and makes Ethereum as a whole more “subjective”, it weakens Ethereum’s credible neutrality. In these cases, it is better to have the feature as a mechanism on top of Ethereum rather than trying to bring it into Ethereum itself. Encrypted mempools are the best example here of something that may be a bit too difficult to encapsulate, at least until delay encryption improves.

Encapsulating too much may make the protocol overly complex:

Protocol complexity is a systemic risk, and adding too many features to the protocol increases it. Precompiles are the best example.

Encapsulating functionality may be counterproductive in the long term because user needs are unpredictable:

A feature that many people think is important and will be used by many users may well turn out not to be used much in practice.

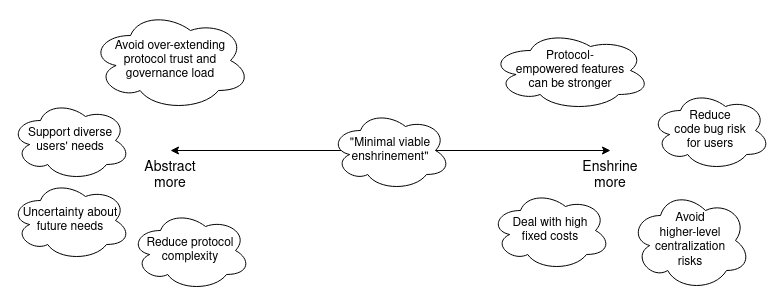

Additionally, the liquid staking, ZK-EVM, and precompile examples show the possibility of a middle path: minimal viable enshrinement. The protocol does not need to encapsulate an entire feature; it can instead contain a specific piece that addresses the key challenges, making the feature easy to implement without being too opinionated or too narrow. Examples include:

Rather than encapsulating a complete liquid staking system, change the staking penalty rules to make trustless liquid staking more feasible;

Rather than encapsulating more precompiles, encapsulate EVM-MAX and/or SIMD to make a wider class of operations easier to implement efficiently;

Rather than encapsulating the whole concept of rollups, simply encapsulate EVM verification.

We can extend the previous diagram as follows:

Sometimes it even makes sense to de-encapsulate something, and removing rarely used precompiles is one example. Account abstraction as a whole, as mentioned earlier, is also a significant form of de-encapsulation. If we want to support backwards compatibility for existing users, the mechanism might actually be surprisingly similar to the one for de-encapsulating precompiles: one of the proposals is EIP-5003, which would allow EOAs to convert their accounts into contracts with the same (or better) functionality.

Which features should be brought into the protocol and which should be left to other layers of the ecosystem is a complex trade-off. This trade-off can be expected to keep evolving over time as our understanding of user needs and the available suite of ideas and technologies continues to improve.