This article explores why the Ethereum consensus layer needs to be ZK-ified, that is, proven with zero-knowledge proofs.

Original article: Zoe, Puzzle Ventures

TL;DR

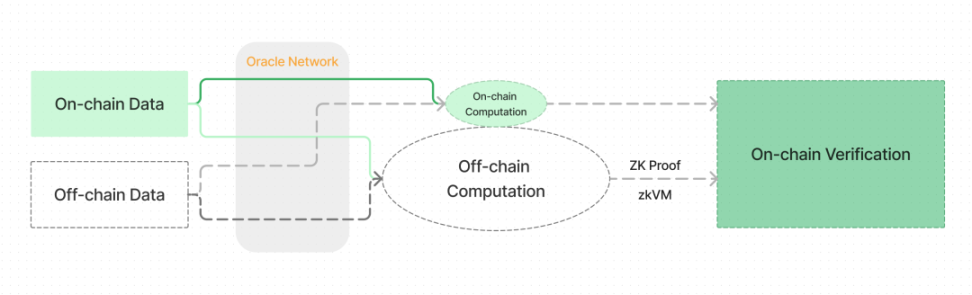

From the competition among many public chains, to Danksharding on the Ethereum roadmap, to optimistic and zk rollups and other Layer 2 solutions, we have been discussing blockchain scalability nonstop: what happens when large numbers of users and funds arrive? In this series of articles, I want to show you a vision of the future made up of three parts: data access, off-chain computation, and on-chain verification.

Trustless Data Access + Off-chain Computation + On-chain Verification

Proving consensus is an important part of this blueprint. This article explores the significance of using zero-knowledge proofs to prove Ethereum's PoS consensus, including:

1. The importance of decentralization for the EVM.

2. The importance of decentralized data access for scaling web3.

Proving the full consensus of the Ethereum mainnet is a complex task. But if we can realize a ZK consensus layer, it will help Ethereum scale while preserving security and trustlessness, strengthen the robustness of the entire Ethereum ecosystem, and lower the cost of participation so that more people can take part.

1. Why is it important to prove the consensus layer?

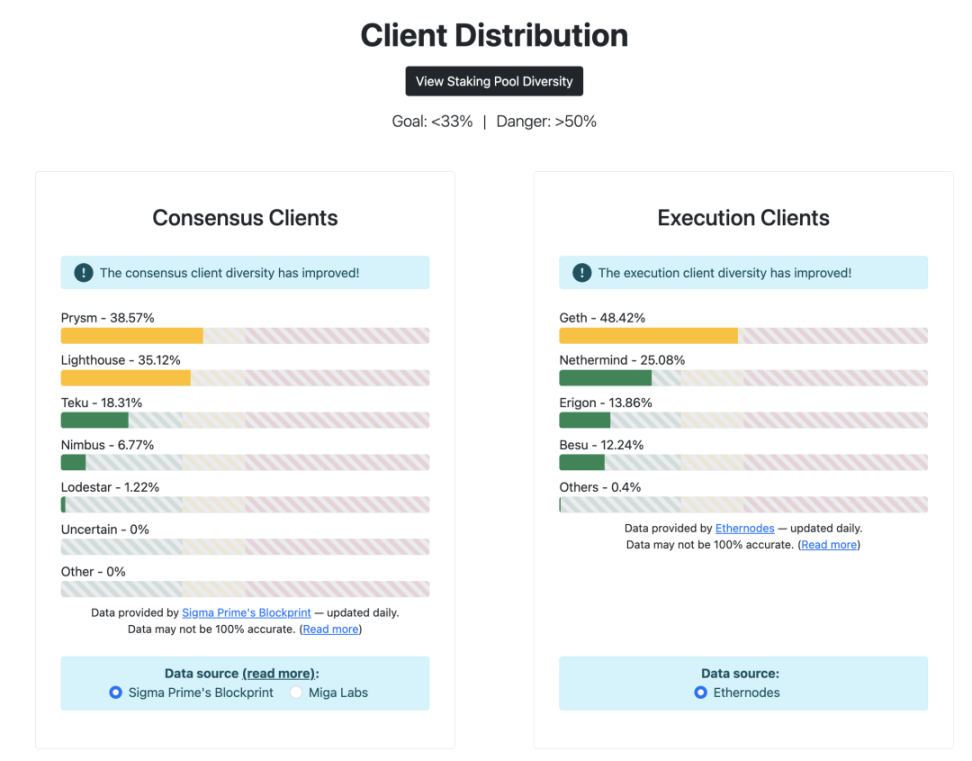

Using ZK to verify the consensus layer of Ethereum L1 makes sense in two broad directions. First, it can make up for today's shortfall in node diversity and strengthen the decentralization and security of Ethereum itself. Second, it gives protocols at every layer of the Ethereum ecosystem a foundation of usability and security for serving more users, covering cross-chain security, trustless data access, decentralized oracles, and scaling.

1. Ethereum’s perspective

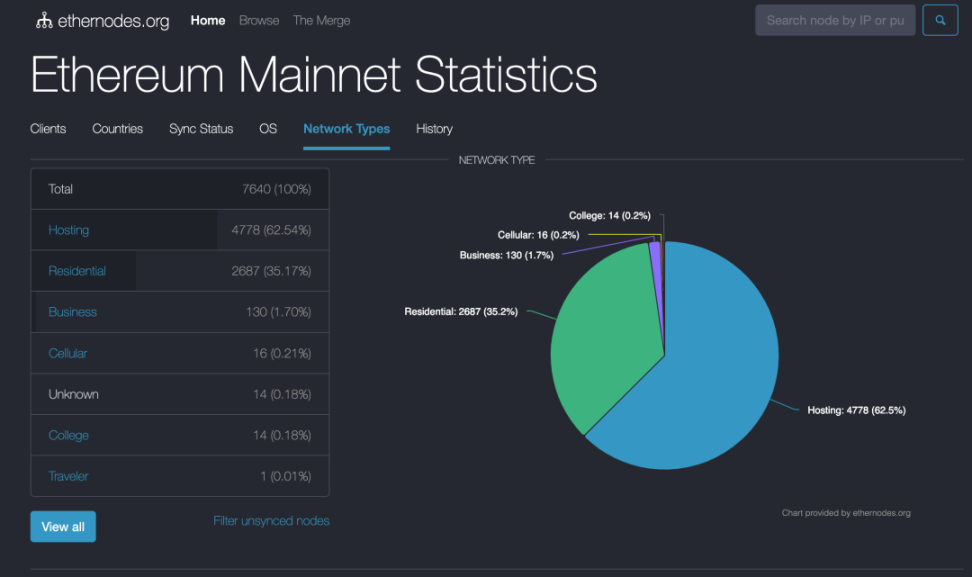

For Ethereum to be decentralized and robust, it needs client diversity: more people, especially ordinary users, running clients built on different codebases. However, it is unrealistic to ask every user to run a full node, because doing so requires a lot of resources. Few people can dedicate at least 16 GB of RAM and a fast SSD of 2 TB or more, and these requirements keep growing.

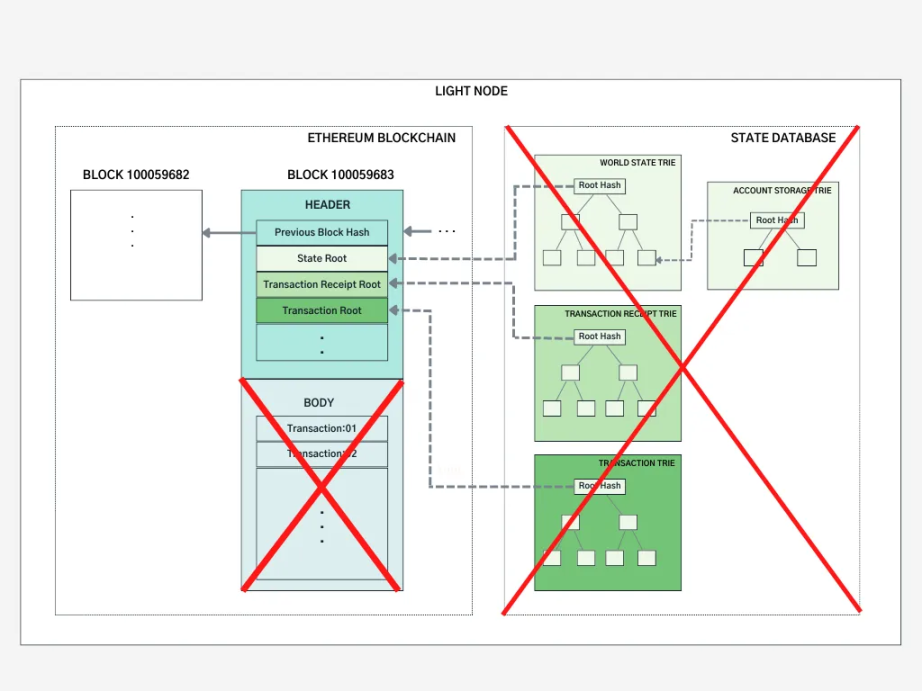

The current goal is a light node that offers the same degree of trust as a full node (trust minimization) while requiring far less memory, storage, and bandwidth. Today, however, light nodes do not participate in the consensus process, or are only partially protected by the consensus mechanism (the Sync Committee).

This goal is called The Verge in Ethereum's roadmap.

"Goal: verifying blocks should be super easy - download N bytes of data, perform a few basic computations, verify a SNARK and you’re done." (The Verge, Ethereum's roadmap)

The Verge aims to close the client gap. The key step is implementing trustless light nodes whose security is equivalent to that of today's full nodes, so that more people can actively participate and strengthen the network's decentralization and robustness.

https://www.ethernodes.org/network-types

https://clientdiversity.org/

2. The perspective of protocols at each layer of the Ethereum ecosystem

Starting from first principles, we need to solve the problem of combining trustless on-chain data access with verifiable off-chain computation.

Today's use of on-chain data is still rudimentary and limited. In many cases, the data a protocol needs to adjust its parameters is too complex to compute on-chain, and obtaining that data trustlessly is too expensive, because it requires accessing large amounts of historical data and performing frequent numerical computation.

For individual users and projects, the ideal is decentralized, end-to-end trustless data transfer, reading, and writing. On that basis, to serve more users in the future, computing costs should be as low as possible while balancing security, usability, and economics.

Specifically including the following aspects:

1. Decentralized and trustless oracles: current protocols use centralized oracles to avoid directly accessing large amounts of historical on-chain data, which adds unnecessary trust costs and reduces composability.

2. Reading and writing data for data- and asset-sensitive protocols: for example, DeFi protocols need to adjust some parameters dynamically while they run. Whether they can do so depends on accessing historical data trustlessly and performing more complex computations, such as adjusting AMM fees based on recent market volatility, designing pricing and volatility models for on-chain derivatives, introducing machine-learning methods into asset management, and adjusting lending rates according to market conditions.

3. Cross-chain security: light-node solutions based on ZK technology currently do better in terms of security, capital efficiency, statefulness, and the diversity of messages they can carry. Succinct's Telepathy cross-chain solution and Polyhedra's cross-chain solution on LayerZero are both built on ZK verification of light-node block headers signed by the Sync Committee. However, the Sync Committee is not the Ethereum PoS consensus layer itself; it carries an additional trust assumption, and there is room to make it more complete in the future.
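As a hypothetical illustration of the AMM-fee case above, the sketch below derives a swap fee from the realized volatility of recent prices. The function names, the fee formula, and every parameter are assumptions chosen for illustration, not any protocol's design. The arithmetic itself is trivial off-chain; the hard parts this article points to are obtaining a long price history trustlessly and proving on-chain that the computation was done correctly.

```python
import math
from statistics import pstdev
from typing import Sequence


def realized_volatility(prices: Sequence[float]) -> float:
    """Population standard deviation of log returns over a price window."""
    if len(prices) < 2:
        raise ValueError("need at least two prices")
    log_returns = [math.log(curr / prev) for prev, curr in zip(prices, prices[1:])]
    return pstdev(log_returns)


def volatility_based_fee_bps(
    prices: Sequence[float],
    base_fee_bps: float = 5.0,    # hypothetical floor fee, in basis points
    sensitivity: float = 1000.0,  # hypothetical bps added per unit of volatility
    max_fee_bps: float = 100.0,   # hypothetical cap, in basis points
) -> float:
    """Hypothetical fee rule: base fee plus a volatility-proportional term, capped."""
    vol = realized_volatility(prices)
    return min(base_fee_bps + sensitivity * vol, max_fee_bps)
```

With a flat price history the fee stays at the floor; a choppy history pushes it toward the cap.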

Currently, due to economic costs, technical limitations, and user experience considerations, developers usually rely on centralized RPC servers, such as Alchemy, Infura, and Ankr, when utilizing on-chain data.

2. Where does the blockchain data come from? Trust assumptions for different data sources

Blockchain computations draw on two sources of data: on-chain data and off-chain data. Computations over that data are likewise carried out, and their results delivered, on-chain or off-chain accordingly, as with the DeFi parameter adjustments mentioned above.

Data Access, computation, proof and verification

The reading, writing and computing of on-chain and off-chain data have two distinctive features:

1. To achieve decentralization and security, the data we obtain should ideally be verified: Don't Trust, Verify.

2. They often involve many complex and expensive computations.

If no suitable technical solution is found, the above two points will affect the usability of the blockchain.

We can illustrate different data acquisition methods through a simple example. Suppose you wanted to check your account balance, what would you do?

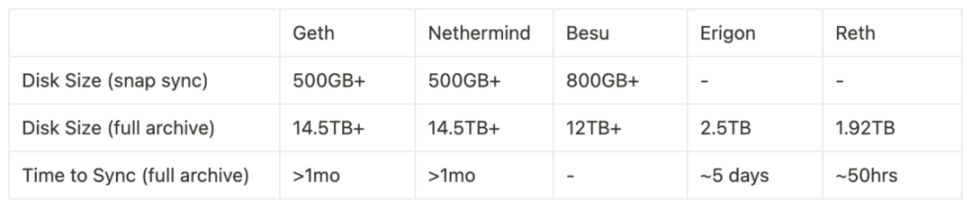

One of the safest ways is to run a full node yourself, check the locally stored Ethereum state, and get the account balance from it.

Full node benchmark: the disk space required depends on the sync mode and the client chosen.

References:

https://ethereum.org/en/developers/docs/nodes-and-clients/run-a-node/; https://docs.google.com/presentation/d/1ZxEp6Go5XqTZxQFYTYYnzyd97JKbcXlA6O2s4RI9jr4/mobilepresent?pli=1&slide=id.g252bbdac496_0_109
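Whichever endpoint answers the balance query, your own full node or a centralized provider, the request looks the same. The sketch below builds a standard `eth_getBalance` JSON-RPC request body and decodes the hex-encoded result; sending it over HTTP is left out, and the zero address used in testing is only a placeholder. Note that the reply is just a number: nothing in it lets you check that the provider answered honestly.

```python
import json


def make_get_balance_request(address: str, block: str = "latest", request_id: int = 1) -> str:
    """Build a standard Ethereum JSON-RPC eth_getBalance request body."""
    return json.dumps({
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "eth_getBalance",
        "params": [address, block],
    })


def parse_balance_wei(response_body: str) -> int:
    """Decode the hex-encoded balance (in wei) from a JSON-RPC response body."""
    response = json.loads(response_body)
    if "error" in response:
        raise RuntimeError(response["error"])
    return int(response["result"], 16)
```

For example, a `result` of `"0xde0b6b3a7640000"` decodes to 10**18 wei, i.e. 1 ETH.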

However, running a full node yourself is expensive and requires ongoing maintenance. To save the trouble, many people simply request data from centralized node operators. There is nothing inherently wrong with this, and it mirrors what happens in Web2; we have not seen these providers behave maliciously. But it does mean trusting a centralized service provider, which adds to the system's overall trust assumptions.

To solve this problem, we can consider two solutions: one is to reduce the cost of running nodes, and the other is to find a way to verify the credibility of third-party data.

The first approach is to store only the necessary data. To access data more efficiently, reduce trust costs, and verify data independently, several teams have developed light clients, such as Helios (Rust-based, developed by a16z), Lodestar, Nimbus, and the JavaScript-based Kevlar. A light client does not store all block data; it downloads and stores only block headers, each of which summarizes all the information in a block. Because light clients can independently verify the data they receive, you no longer need to fully trust a third-party provider when you obtain data from one.

https://medium.com/coinmonks/ethereum-data-transaction-trie-simplified-795483ff3929
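The core of a light client's independent check is a Merkle proof: given a trusted root from a block header, it can confirm that a piece of data belongs to that block without downloading everything else. The sketch below uses a binary SHA-256 Merkle tree; its verification loop follows the `is_valid_merkle_branch` helper from the consensus-layer specification, while `build_tree` and `get_branch` are illustrative helpers. Execution-layer state actually lives in a Merkle-Patricia trie hashed with Keccak-256, so treat this as a simplified model of the idea, not how account balances are proven in practice.

```python
import hashlib
from typing import List


def sha256(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def build_tree(leaves: List[bytes]) -> List[List[bytes]]:
    """Return every level of a binary Merkle tree; level 0 is the leaves, the last is [root].
    Assumes a power-of-two number of leaves (illustrative simplification)."""
    if not leaves or len(leaves) & (len(leaves) - 1):
        raise ValueError("number of leaves must be a power of two")
    levels = [list(leaves)]
    while len(levels[-1]) > 1:
        prev = levels[-1]
        levels.append([sha256(prev[i] + prev[i + 1]) for i in range(0, len(prev), 2)])
    return levels


def get_branch(levels: List[List[bytes]], index: int) -> List[bytes]:
    """Collect the sibling hash at each level, from the leaf up to just below the root."""
    branch = []
    for level in levels[:-1]:
        branch.append(level[index ^ 1])
        index //= 2
    return branch


def is_valid_merkle_branch(leaf: bytes, branch: List[bytes], depth: int, index: int, root: bytes) -> bool:
    """Bit i of index says whether the node at height i is a right child."""
    value = leaf
    for i in range(depth):
        if index // (2**i) % 2:
            value = sha256(branch[i] + value)
        else:
            value = sha256(value + branch[i])
    return value == root
```

The verifier needs only the root (from a header it trusts), the leaf, and `depth` sibling hashes, which is why a light client can check data from an untrusted provider cheaply.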

The main features of light nodes include:

Ideally, light nodes can run on mobile phones or embedded devices.

Ideally, they would have the same functionality and security guarantees as full nodes.

However, light nodes do not participate in the consensus process, or are only protected by part of the consensus mechanism, namely the Sync Committee.

The Sync Committee is the light node's trust assumption.

Before The Merge, in October 2021, the Beacon Chain (live since December 2020) went through a hard fork called Altair, whose core purpose was to provide consensus support for light nodes. Unlike full PoS consensus, which involves every validator, the sync committee is a much smaller group of 512 validators, randomly selected and rotated only once per sync committee period (256 epochs, about 27 hours).
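The numbers above follow from the mainnet constants in the consensus specification. The sketch below computes which sync committee period a slot belongs to and how long a period lasts. The `has_sync_supermajority` helper is a simplified stand-in, loosely modeled on the 2/3 participation threshold in the Altair light-client sync protocol, not the full update-validation logic.

```python
from typing import Sequence

# Mainnet values from the consensus-layer specification (Altair).
SECONDS_PER_SLOT = 12
SLOTS_PER_EPOCH = 32
EPOCHS_PER_SYNC_COMMITTEE_PERIOD = 256
SYNC_COMMITTEE_SIZE = 512


def sync_committee_period(slot: int) -> int:
    """Index of the sync committee period containing the given slot."""
    epoch = slot // SLOTS_PER_EPOCH
    return epoch // EPOCHS_PER_SYNC_COMMITTEE_PERIOD


def period_length_hours() -> float:
    """Wall-clock length of one sync committee period: 256 * 32 * 12 s."""
    seconds = EPOCHS_PER_SYNC_COMMITTEE_PERIOD * SLOTS_PER_EPOCH * SECONDS_PER_SLOT
    return seconds / 3600


def has_sync_supermajority(participation_bits: Sequence[bool]) -> bool:
    """True if at least 2/3 of the committee signed (integer arithmetic, no floats)."""
    if len(participation_bits) != SYNC_COMMITTEE_SIZE:
        raise ValueError("expected one bit per sync committee member")
    return sum(participation_bits) * 3 >= len(participation_bits) * 2
```

A light client therefore follows one committee handoff roughly every 27 hours, which is what makes it cheap, and is also why its security rests on those 512 signers.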

"Light clients such as Helios and Succinct are taking steps toward solving the problem, but a light client is far from a fully verifying node: a light client merely verifies the signatures of a random subset of validators called the sync committee, and does not verify that the chain actually follows the protocol rules. To bring us to a world where users can actually verify that the chain follows the rules, we would have to do something different." (Vitalik Buterin, "How will Ethereum's multi-client philosophy interact with ZK-EVMs?")

This is why we want to verify Ethereum's entire consensus layer: to usher in a future that is more secure and more usable, with more diverse protocols and adoption at scale. At present, the best available solution is zero-knowledge technology.

3. The road to proving the consensus layer with zero knowledge

To build an environment free of trust assumptions, we need to solve light-node trustworthiness, decentralized data access, and verification of off-chain computation. For all of these, zero-knowledge proofs are currently the most widely recognized core technology, spanning underlying solutions including but not limited to zkEVM, zkWASM, other zkVMs, and zk coprocessors.

Proving the consensus layer is an important part of this.

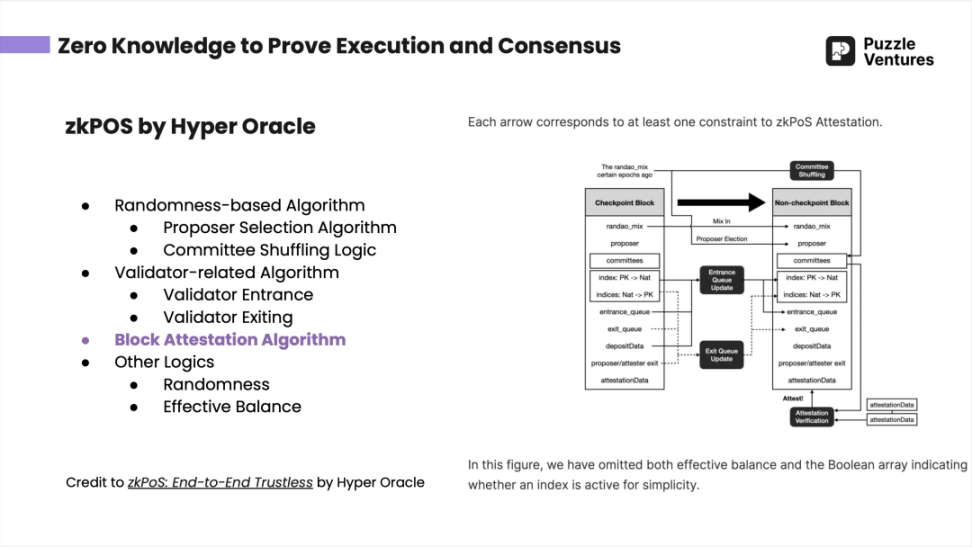

PoS algorithms are very complex, and implementing them in ZK requires a great deal of engineering work and architectural consideration, so let's first break them down into their components.

1. Core steps for consensus formation in Ethereum 2.0

(1) Algorithms related to the validator

These include the following steps:

Becoming a validator: a validator candidate sends 32 ETH to the deposit contract, then waits anywhere from at least about 16 hours to several days or weeks for the Beacon Chain to process the deposit and activate it as an official validator. (Refer to the FAQ: Why does it take so long for a validator to be activated?)

Performing validator duties: this involves randomness (for assigning duties) and the block attestation algorithms.

Exiting the validator role: a validator can exit voluntarily or be forced out by being slashed for violations. A validator can initiate a voluntary exit at any time, but each epoch limits how many validators can exit. If too many validators try to exit at once, they are placed in a queue and must keep performing validator duties while they wait. After a successful exit, the validator must wait a further withdrawability delay (at least 256 epochs, about 27 hours) before its staked funds can be withdrawn.
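The exit queue can be modeled with the Phase 0 constants from the consensus specification: at most max(4, active_validators // 65536) validators may exit per epoch, an exit takes effect no earlier than 5 epochs after it is requested, and funds become withdrawable 256 epochs after the exit epoch. The sketch assumes an empty queue and a constant validator count, and `exit_schedule` is a hypothetical helper. Later forks have changed parts of these rules (Electra, for instance, moved to balance-based churn), so this illustrates the mechanism rather than today's exact numbers.

```python
from typing import List, Tuple

# Phase 0 mainnet constants; later forks changed parts of the churn rules.
MIN_PER_EPOCH_CHURN_LIMIT = 4
CHURN_LIMIT_QUOTIENT = 2**16
MAX_SEED_LOOKAHEAD = 4
MIN_VALIDATOR_WITHDRAWABILITY_DELAY = 256  # epochs


def churn_limit(active_validator_count: int) -> int:
    """Maximum number of validators that may exit per epoch."""
    return max(MIN_PER_EPOCH_CHURN_LIMIT, active_validator_count // CHURN_LIMIT_QUOTIENT)


def exit_schedule(num_exiting: int, current_epoch: int,
                  active_validator_count: int) -> List[Tuple[int, int]]:
    """(exit_epoch, withdrawable_epoch) for validators all requesting exit in current_epoch.
    Hypothetical helper: assumes the queue was empty and the active set does not change."""
    limit = churn_limit(active_validator_count)
    earliest = current_epoch + 1 + MAX_SEED_LOOKAHEAD  # as in compute_activation_exit_epoch
    schedule = []
    for position in range(num_exiting):
        exit_epoch = earliest + position // limit  # each epoch admits at most `limit` exits
        schedule.append((exit_epoch, exit_epoch + MIN_VALIDATOR_WITHDRAWABILITY_DELAY))
    return schedule
```

With 100,000 active validators the limit is 4 per epoch, so ten simultaneous exit requests spread over three epochs.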

(2) Randomness-related algorithms

Each epoch contains 32 slots, each of which can hold one block. Validators are randomly assigned 2 epochs in advance: all active validators are divided across the 32 slots into committees, which perform their duties in that epoch and are responsible for consensus on each slot's block.
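The random assignment can be sketched with the swap-or-not shuffle that the consensus specification defines as `compute_shuffled_index` (90 rounds of SHA-256-driven swaps). The `slot_groups` helper is a hypothetical simplification: the real protocol derives the seed from RANDAO mixes with a domain tag, and each slot's share of validators may be split into several committees, which this sketch ignores. Because the shuffle is a permutation, every validator lands in exactly one slot.

```python
import hashlib
from typing import List

SHUFFLE_ROUND_COUNT = 90  # mainnet value in the consensus spec
SLOTS_PER_EPOCH = 32


def sha256(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def compute_shuffled_index(index: int, index_count: int, seed: bytes) -> int:
    """Swap-or-not shuffle: return the position that `index` maps to under a seeded permutation."""
    assert 0 <= index < index_count
    for current_round in range(SHUFFLE_ROUND_COUNT):
        round_byte = current_round.to_bytes(1, "little")
        pivot = int.from_bytes(sha256(seed + round_byte)[:8], "little") % index_count
        flip = (pivot + index_count - index) % index_count
        # max(index, flip) is the same for both members of a pair, so they agree on swapping.
        position = max(index, flip)
        source = sha256(seed + round_byte + (position // 256).to_bytes(4, "little"))
        byte = source[(position % 256) // 8]
        bit = (byte >> (position % 8)) % 2
        index = flip if bit else index
    return index


def slot_groups(validator_indices: List[int], seed: bytes) -> List[List[int]]:
    """Hypothetical helper: shuffle all validators, then cut the list into one group per slot."""
    n = len(validator_indices)
    shuffled = [validator_indices[compute_shuffled_index(i, n, seed)] for i in range(n)]
    return [shuffled[n * s // SLOTS_PER_EPOCH: n * (s + 1) // SLOTS_PER_EPOCH]
            for s in range(SLOTS_PER_EPOCH)]
```

Anyone holding the same seed recomputes the same assignment, which is what lets every node (and a ZK circuit) agree on who was supposed to vote in each slot.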

Within each slot, one validator is randomly selected as the block proposer, and the committee members act as attesters that vote on the block. Proposer/builder separation goes further, splitting block building, including transaction ordering, from block proposing (see proposer/builder separation - PBS for details).

(3) Block Attestation and BLS signature related algorithms

The signature part is the core of the consensus layer.

Each slot's committee votes (attests) using BLS signatures. For a checkpoint to be justified, and ultimately finalized, it must receive votes representing at least 2/3 of the total staked ETH.
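The 2/3 rule is stake-weighted: it counts effective balance, not the number of validators. The sketch below mirrors the integer comparison the consensus specification uses when weighing justification (attesting balance × 3 ≥ total active balance × 2); the helper names are hypothetical, and the full justification and finalization logic is omitted.

```python
from typing import Sequence


def attesting_balance(effective_balances_gwei: Sequence[int], voted: Sequence[bool]) -> int:
    """Total effective balance (in Gwei) of validators that voted for the target."""
    return sum(b for b, v in zip(effective_balances_gwei, voted) if v)


def is_supermajority(attesting_gwei: int, total_active_gwei: int) -> bool:
    """attesting / total >= 2/3, checked with integers to avoid rounding errors."""
    return attesting_gwei * 3 >= total_active_gwei * 2
```

For nine validators with 32 ETH each, six votes reach exactly 2/3 and pass; five do not.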

In the Ethereum PoS consensus layer, BLS signatures use the pairing-friendly BLS12-381 elliptic curve, which allows all the signatures to be aggregated into one, reducing proof time and size.

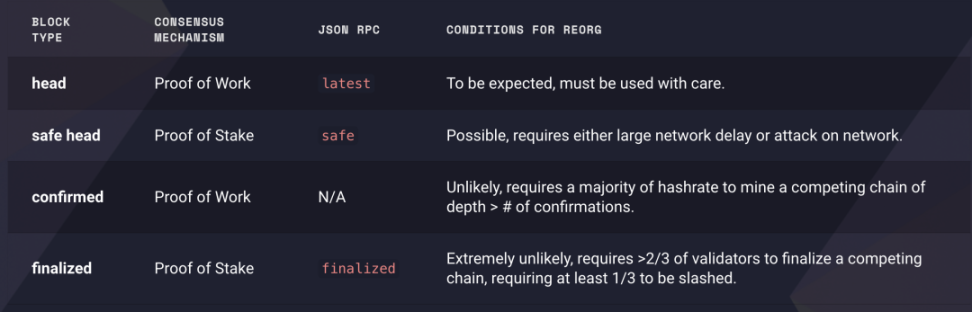

Under Proof of Work, blocks can always be reorganized (re-orged). After The Merge, the execution layer gained the concepts of finalized blocks and safe heads. To finalize a conflicting block, an attacker would need to have at least 1/3 of the total staked ether burned through slashing, so in this respect PoS offers stronger guarantees than PoW.
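The 1/3 figure comes from a quorum-intersection argument: finalizing two conflicting blocks requires two 2/3 supermajorities, and any two such sets must share at least 2/3 + 2/3 - 1 = 1/3 of the stake. Those shared validators have signed conflicting votes, which is slashable. The arithmetic as a small sketch, with hypothetical helper names and an illustrative (not measured) total stake:

```python
from fractions import Fraction


def min_overlap(quorum_a: Fraction, quorum_b: Fraction) -> Fraction:
    """Smallest possible shared stake fraction between two quorums (inclusion-exclusion)."""
    return max(Fraction(0), quorum_a + quorum_b - 1)


def min_slashed_eth(total_staked_eth: int, quorum: Fraction = Fraction(2, 3)) -> Fraction:
    """Lower bound on stake that must have voted for both of two conflicting finalized blocks."""
    return total_staked_eth * min_overlap(quorum, quorum)
```

With 30 million ETH staked, for example, at least 10 million ETH would be slashable.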

https://blog.ethereum.org/2021/11/29/how-the-merge-impacts-app-layer

At the end of June 2023, Puzzle Ventures Evening Self-study featured Hyper Oracle's zkPoS (using ZK to verify Ethereum's full consensus layer). See zkPoS: End-to-End Trustless for details.

(4) Others, such as weak subjectivity checkpoints

One challenge for a trustless proof of PoS consensus is that choosing a weak subjectivity checkpoint relies on social consensus, that is, on information from outside the protocol. These checkpoints act as revert limits, because blocks before a weak subjectivity checkpoint cannot be changed. For details, see: https://ethereum.org/en/developers/docs/consensus-mechanisms/pos/weak-subjectivity/

Checkpoints are therefore another point to consider when ZK-ifying the consensus layer.

2. The ZK technology stack for proving the consensus layer

When proving the consensus layer, generating proofs of signatures and other computations is very expensive, while verifying the resulting zero-knowledge proofs is very cheap by comparison.

When choosing how to prove the consensus layer with zero knowledge, protocols need to consider the following factors:

What do you want to prove?

What is the application scenario after the proof?

How to improve the efficiency of the proof?

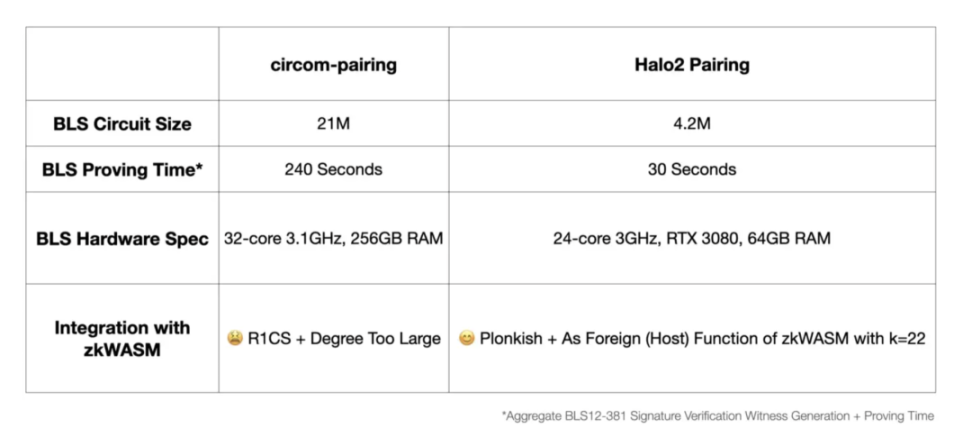

Take Hyper Oracle as an example: to prove BLS signatures, it chose Halo2 rather than Circom, which Succinct Labs uses, for several reasons:

Both Circom and Halo2 can generate zero-knowledge proofs of BLS signatures (over the BLS12-381 elliptic curve).

Hyper Oracle is not only building zkPoS; its core product is a programmable on-chain zero-knowledge oracle (Programmable Onchain zkOracle). Its zkGraph, zkIndexing, and zkAutomation are user-facing, and it also uses the zkWASM virtual machine to verify off-chain computation. Although Circom is easier for engineers to use, it is less compatible with this stack and cannot guarantee that all of the required logic can be implemented.

Circom-pairing compiles to R1CS, which is not compatible with the Plonkish constraint system used by zkWASM and other circuits, whereas the Halo2 pairing circuit can easily be integrated into the zkWASM circuit. R1CS is also less suited to proof batching.

From an efficiency perspective, the BLS circuit generated with Halo2-pairing is smaller, with shorter proving time, lower hardware requirements, and lower gas fees.

https://mirror.xyz/hyperoracleblog.eth/lAE9erAz5eIlQZ346PG6tfh7Q6xy59bmA_kFNr-l6dE
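To see why an R1CS circuit does not drop straight into a Plonkish one, compare how each system expresses the same constraint x · y = out. In R1CS, every constraint is a product of two linear combinations of the witness that must equal a third; in a Plonkish system, each row of a table must satisfy a gate equation switched on by selector values. The standard PLONK gate is shown; Halo2 also supports custom gates, copy constraints, and lookups, which are omitted. The small field modulus, the witness layout, and the helper names are assumptions for illustration only.

```python
from typing import List

P = 2**61 - 1  # illustrative prime modulus (assumption); real circuits use much larger fields


def dot(row: List[int], witness: List[int]) -> int:
    return sum(r * x for r, x in zip(row, witness)) % P


def r1cs_satisfied(A: List[List[int]], B: List[List[int]], C: List[List[int]],
                   witness: List[int]) -> bool:
    """R1CS: for every constraint row, (A.w) * (B.w) == C.w over the field."""
    return all(dot(a, witness) * dot(b, witness) % P == dot(c, witness)
               for a, b, c in zip(A, B, C))


def plonk_gate_satisfied(q_l: int, q_r: int, q_o: int, q_m: int, q_c: int,
                         a: int, b: int, c: int) -> bool:
    """Standard PLONK gate: q_L*a + q_R*b + q_O*c + q_M*a*b + q_C == 0 over the field."""
    return (q_l * a + q_r * b + q_o * c + q_m * a * b + q_c) % P == 0


# x * y = out with x = 3, y = 4, out = 12.
# R1CS witness layout (an assumption of this sketch): w = [1, x, y, out].
A = [[0, 1, 0, 0]]  # selects x
B = [[0, 0, 1, 0]]  # selects y
C = [[0, 0, 0, 1]]  # selects out
w = [1, 3, 4, 12]
r1cs_ok = r1cs_satisfied(A, B, C, w)

# Plonkish row: a = x, b = y, c = out; selectors q_M = 1, q_O = -1 switch on multiplication.
plonk_ok = plonk_gate_satisfied(q_l=0, q_r=0, q_o=-1, q_m=1, q_c=0, a=3, b=4, c=12)
```

Both checks accept the same assignment. What matters for integration is the shape: R1CS fixes one constraint form, while a Plonkish circuit is a table of columns, selectors, and gates that other Plonkish circuits can share.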

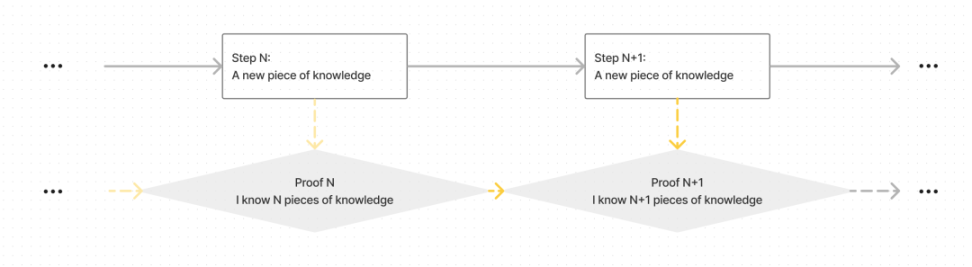

Another key element of proving the consensus layer with zero knowledge is recursive proofs, that is, proofs of proofs, which fold everything that happened before into a single proof.

Without recursive proofs, the output eventually grows to O(block height) in size: one ZK proof for each block attestation. With recursive proofs, a single proof of size O(1) covers any number of blocks, together with the initial and final states.

Verifying Proof N together with Step N+1 yields Proof N+1, which attests to all N+1 steps, instead of verifying all N steps separately.
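To illustrate the shape of this recursion (Proof N + Step N+1 → Proof N+1) without a real proving system, here is a mock in Python. An HMAC under a hidden key stands in for SNARK soundness, so this models the data flow only; it is neither secure nor zero-knowledge, and every name in it is hypothetical. What it does show faithfully is that each new proof has constant size and that verifying it never replays earlier steps.

```python
import hashlib
import hmac
from dataclasses import dataclass

# Hypothetical stand-in for SNARK soundness: only the mock prover knows this key,
# so tags cannot be forged. A real SNARK needs no shared secret.
_MOCK_KEY = b"mock-snark-soundness"


def _tag(initial: bytes, final: bytes, steps: int) -> bytes:
    msg = initial + final + steps.to_bytes(8, "big")
    return hmac.new(_MOCK_KEY, msg, hashlib.sha256).digest()


@dataclass(frozen=True)
class MockProof:
    initial_state: bytes  # 32-byte commitment to the starting state
    final_state: bytes    # 32-byte commitment to the latest state
    steps: int            # number of transitions covered
    tag: bytes            # 32-byte stand-in for the proof itself


def transition(state: bytes, block_header: bytes) -> bytes:
    """Hypothetical state transition: fold one block header into the state commitment."""
    return hashlib.sha256(state + block_header).digest()


def verify(proof: MockProof) -> bool:
    """Cost is independent of how many steps the proof covers; no step is replayed."""
    expected = _tag(proof.initial_state, proof.final_state, proof.steps)
    return hmac.compare_digest(expected, proof.tag)


def genesis_proof(initial_state: bytes) -> MockProof:
    return MockProof(initial_state, initial_state, 0, _tag(initial_state, initial_state, 0))


def prove_step(prev: MockProof, block_header: bytes) -> MockProof:
    """Proof N + Step N+1 -> Proof N+1: check the previous proof, apply one step, re-prove."""
    if not verify(prev):
        raise ValueError("previous proof does not verify")
    new_state = transition(prev.final_state, block_header)
    steps = prev.steps + 1
    return MockProof(prev.initial_state, new_state, steps, _tag(prev.initial_state, new_state, steps))
```

After 100 or 100,000 steps, a light client still checks one fixed-size object.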

Going back to the original goal, our solution should target light clients with limited compute and memory. Even if each proof can be verified in a fixed amount of time, verification time becomes very long once blocks and proofs accumulate.

3. Ultimate goal: diverse Layer 1 zkEVMs

Ethereum's goal is not only to prove the consensus layer, but also to prove the entire Layer 1 virtual machine with zero knowledge through zkEVMs, ultimately with multiple diverse zkEVMs that strengthen Ethereum's decentralization and robustness.

To address these issues, Ethereum's current solutions and roadmap are as follows:

Lightweight: smaller memory, storage, and bandwidth requirements

Currently, light nodes store and verify only block headers.

Future development will require further work on Verkle trees and stateless clients, which involves changes to mainnet data structures.

Secure and trustless: the same trust minimization as a full node

At present, the basic light-node consensus mechanism, the Sync Committee, has been implemented, but it is only a transitional solution.

Use SNARKs to verify Ethereum Layer 1, including verifying the execution layer's Verkle proofs, verifying the consensus layer, and SNARKing the entire virtual machine.

A Layer 1 zkEVM would prove the entire Ethereum Layer 1 virtual machine with zero knowledge and enable a diversity of zkEVM implementations.

Possible risks

Ideally, when we enter the ZK era, we will need multiple open-source zkEVMs: different clients would use different zkEVM implementations, and each client would wait for a proof compatible with its own implementation before accepting a block.

However, multiple proof systems bring their own problems. Each proof system needs its own peer-to-peer network, and a client that supports only one proof system must wait for the corresponding type of proof before its verifier can accept a block. Two main challenges may arise: latency and data inefficiency. Latency arises mainly because proofs are slow to generate and different proof systems finish at different times; this gap gives attackers an opening to create temporary forks. Data inefficiency arises because generating several types of ZK proofs requires keeping the original signatures. In theory, one advantage of zkSNARKs is that the original signatures and other data can be discarded, so these goals are in tension, and this still needs to be optimized and resolved.

4. Future Outlook

To allow web3 to welcome more users, provide a smoother experience, create higher usability, and ensure application security, we must build infrastructure for decentralized data access, off-chain computing, and on-chain verification.

Proving the consensus layer is an important part of this. Besides Ethereum PSE and the zkEVM Layer 2s mentioned above, several protocols are using zero-knowledge proofs of consensus to achieve their own application goals. Hyper Oracle (a programmable zkOracle network) plans to use zero knowledge to prove the entire Ethereum PoS consensus layer in order to obtain data. Succinct Labs' Telepathy is a light-node bridge that enables cross-chain communication by verifying Sync Committee consensus and submitting state validity proofs. Polyhedra started as a light-node bridge, but now also says it uses deVirgo to produce ZK proofs of full-node, full consensus.

Beyond cross-chain security and decentralized oracles, this pattern of off-chain computation plus on-chain verification may also take part in fraud proofs for optimistic rollups and integrate with OP L2s, or provide on-chain proofs for more complex intent structures in intent-based architectures.

What we are discussing here concerns not only the off-chain ecosystem around Ethereum, but also the broader market beyond Ethereum.

Many parts of this topic still deserve in-depth research. For example, on August 24 last week, a16z published an article arguing that stateless blockchains are unattainable; other examples include weak subjectivity checkpoints and whether Sync Committee security is mathematically sufficient.

Thanks again for your advice and feedback: Alex @ IOBC (@looksrare_eth), Fan Zhang @ Yale University (@0xFanZhang), Roy @ Aki Protocol (@aki_protocol), Zhixiong Pan @ ChainFeeds (@nake13), Suning Yao @ Hyper Oracle (@msfew_eth), Qi Zhou @ EthStorage (@qc_qizhou), Sinka @ Delphinus (@DelphinusLab), Shumo @ Manta (@shumochu)