As artificial intelligence crosses the Turing threshold and blockchain reconstructs the structure of trust, data is quietly replacing energy as the core resource for a new round of civilizational leaps. Amid this sweeping technological shift, an old question is making a comeback: can humans still have true privacy?

The greatest enemy of knowledge is not ignorance, it is the illusion of knowledge. ——Stephen Hawking

Privacy computing was born in response. It is not the mutation of a single isolated technology, but the result of a long interplay among cryptography, distributed systems, artificial intelligence, and human values. Since theoretical frameworks such as secure multi-party computation and homomorphic encryption were proposed in the late 20th century, privacy computing has gradually evolved into a key guardrail for an era of out-of-control data: it allows us to compute collaboratively, share value, and rebuild trust without exposing the original data.

This is a piece of technological history that is still unfolding, and it is also a philosophical question about whether free will can survive in an algorithmic world. Privacy computing may be the first door to the answer.

1. The embryonic stage (1949–1982): the starting point of modern cryptography

1949: Shannon and the beginning of information theory

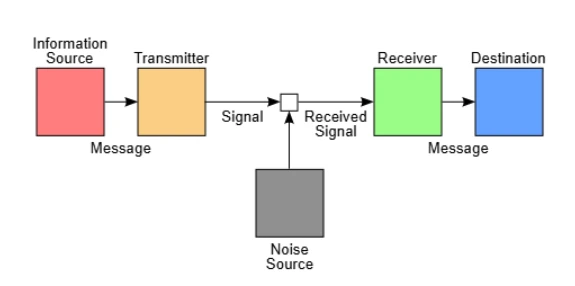

In 1949, Claude Shannon published Communication Theory of Secrecy Systems, the first systematic analysis of the security of communication systems using rigorous mathematical methods. He used information theory to define perfect secrecy and showed that only a one-time pad (a truly random key at least as long as the message, used once) can meet this strict requirement. Shannon's method was extremely rigorous: starting from mathematical principles, he used probability theory and statistics to build a model of a secrecy system. He clarified the concepts of entropy and information content, laying the theoretical foundation for modern cryptography.
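To make the one-time pad concrete, here is a minimal Python sketch. The function names `otp_encrypt` and `otp_decrypt` are illustrative and do not come from Shannon's paper; the sketch assumes the key is truly random, as long as the message, and never reused.

```python
import secrets


def otp_encrypt(message: bytes):
    """Return (key, ciphertext). The key is random and as long as the message."""
    key = secrets.token_bytes(len(message))
    ciphertext = bytes(m ^ k for m, k in zip(message, key))
    return key, ciphertext


def otp_decrypt(key: bytes, ciphertext: bytes) -> bytes:
    # XOR is its own inverse, so decryption repeats the same operation.
    return bytes(c ^ k for c, k in zip(ciphertext, key))


key, ciphertext = otp_encrypt(b"attack at dawn")
print(otp_decrypt(key, ciphertext))
```

Because every key of the right length is equally likely, a given ciphertext is consistent with every possible plaintext of that length, which is the intuition behind perfect secrecy.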

The classic communication model proposed by Shannon in his 1948 paper A Mathematical Theory of Communication shows the relationship between the information source, encoder, channel, decoder and information receiver.

Shannon's research process was creative. During his time at Bell Labs, he not only devoted himself to theory but also carried out a great deal of experimental verification. He liked to explain complex concepts through intuitive analogies, such as comparing information transmission to water flow and entropy to uncertainty. According to anecdotes, he also rode a unicycle through the laboratory corridors to relax and stimulate creativity. His work had a far-reaching impact, not only laying the theoretical foundation for modern cryptography but also shaping the research path of the entire information security field in the decades that followed.

1976: Diffie-Hellman Breakthrough

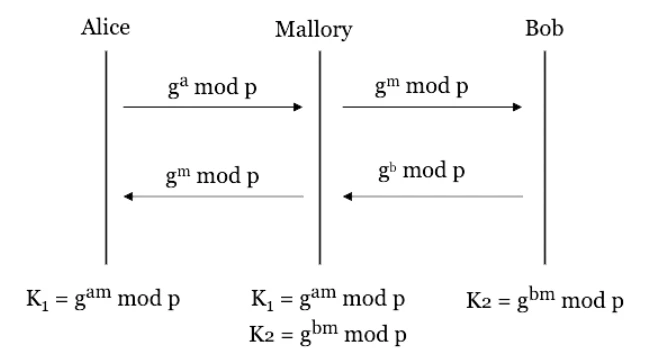

In 1976, Whitfield Diffie and Martin Hellman proposed the concept of public-key cryptography in the paper New Directions in Cryptography and designed the Diffie-Hellman key exchange protocol. The protocol's security rests on the difficulty of the discrete logarithm problem. It was the first published scheme for securely agreeing on a key without any pre-shared secret.

This figure shows the basic process of Diffie-Hellman key exchange. Alice and Bob exchange information over a public channel and end up with a shared key that an eavesdropper cannot learn.
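The exchange can be sketched in a few lines of Python. The parameters below (p = 23, g = 5) are a toy textbook choice used only for illustration; real deployments use groups of 2048 bits or more, and the helper names are hypothetical.

```python
import secrets

P = 23  # toy prime modulus (illustrative only, far too small for real use)
G = 5   # generator of the multiplicative group modulo 23


def dh_keypair(p=P, g=G):
    """Pick a random private exponent and derive the public value g^private mod p."""
    private = secrets.randbelow(p - 2) + 1  # uniform in 1 .. p-2
    public = pow(g, private, p)
    return private, public


def dh_shared(their_public, my_private, p=P):
    """Combine the other party's public value with our own private exponent."""
    return pow(their_public, my_private, p)


alice_private, alice_public = dh_keypair()
bob_private, bob_public = dh_keypair()

# Only the public values cross the channel; both sides derive the same secret.
print(dh_shared(bob_public, alice_private) == dh_shared(alice_public, bob_private))
```

An eavesdropper sees p, g, and both public values, but recovering either private exponent from them is the discrete logarithm problem.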

The research was not smooth sailing. The two spent years trying and failing before they found a suitable mathematical structure through in-depth study of number theory. Diffie also became the subject of many anecdotes in the academic community because of his distinctive appearance and manner; for example, conference organizers often mistook him for a member of a rock band. This work fundamentally changed the field of cryptography and laid the foundation for digital signatures and other modern security protocols.

1977: RSA algorithm is born

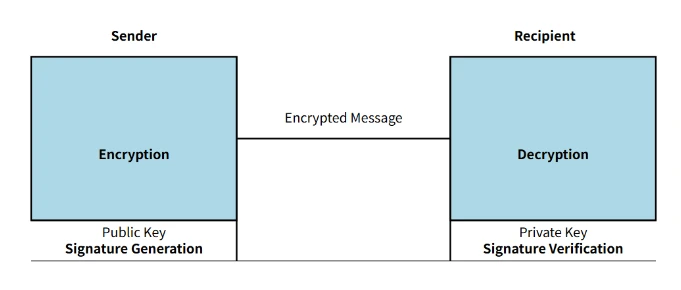

In 1977, Ron Rivest, Adi Shamir, and Leonard Adleman of MIT jointly invented the famous RSA algorithm, the first practical asymmetric encryption algorithm. Its security rests on the difficulty of factoring the product of two large primes, and the three arrived at a practical asymmetric encryption mechanism through careful mathematical derivation and computer experiments.

This diagram shows the RSA encryption and decryption process, including how the public and private keys are generated and how the public key is used to encrypt and the private key to decrypt.

During the research, Rivest reportedly had a sudden inspiration after a long night of attempts and quickly completed a draft of the algorithm, while Adleman was responsible for implementation and verification. To commemorate the moment, they encrypted a famous message with RSA and challenged their colleagues to decrypt it. RSA is not only of theoretical significance; more importantly, it provides a practical security solution for applications such as e-commerce and digital signatures.
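As a rough illustration of the key generation, encryption, and decryption steps, here is a textbook RSA sketch in Python. The primes 61 and 53 and the exponent 17 are a common classroom example, not values from the original paper; real RSA uses primes of at least 1024 bits together with a padding scheme, both of which this sketch omits.

```python
def rsa_keygen(p, q, e):
    """Build a toy key pair from two primes and a public exponent."""
    n = p * q
    phi = (p - 1) * (q - 1)
    d = pow(e, -1, phi)  # modular inverse of e (requires Python 3.8+)
    return (n, e), (n, d)


def rsa_encrypt(public_key, message):
    n, e = public_key
    return pow(message, e, n)


def rsa_decrypt(private_key, ciphertext):
    n, d = private_key
    return pow(ciphertext, d, n)


public_key, private_key = rsa_keygen(61, 53, 17)
ciphertext = rsa_encrypt(public_key, 65)
print(ciphertext, rsa_decrypt(private_key, ciphertext))
```

Anyone can encrypt with the public pair (n, e), but computing d requires knowing the factors of n.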

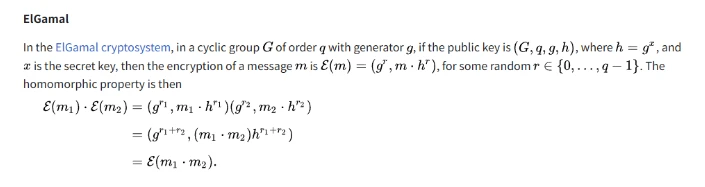

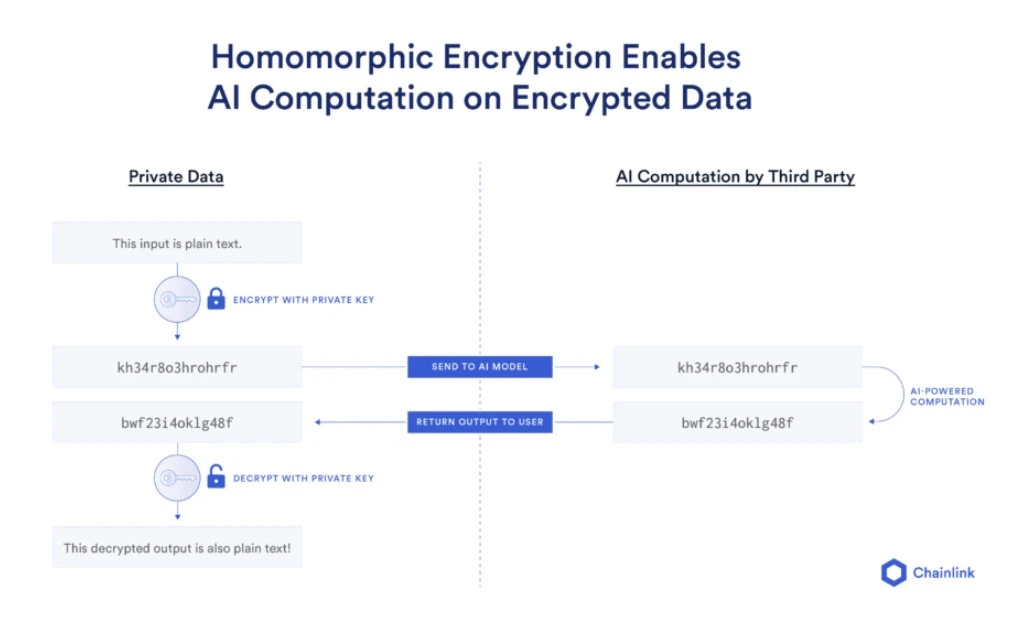

1978: The Beginning of Homomorphic Encryption

In 1978, shortly after RSA was proposed, Rivest and Adleman, together with Michael Dertouzos, explored the possibility of computing on encrypted data, the idea now known as homomorphic encryption. They asked a simple question: can an encryption system allow ciphertext to be operated on directly, without decryption? Although they could not fully solve this problem at the time, their forward-looking question triggered more than 30 years of continuous exploration in the cryptography community.

This figure shows the concept of homomorphic encryption: operations are performed directly on ciphertext, and the result after decryption matches the result of performing the same operations on the plaintext.
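A small example of this property: unpadded textbook RSA happens to be homomorphic with respect to multiplication. The Python sketch below uses a toy key (n = 3233, e = 17, d = 2753) chosen for illustration; it shows a partial homomorphism only, not a fully homomorphic scheme.

```python
# Toy textbook RSA key (illustrative values, no padding).
N, E, D = 3233, 17, 2753


def encrypt(m):
    return pow(m, E, N)


def decrypt(c):
    return pow(c, D, N)


def multiply_ciphertexts(c1, c2):
    """Multiply two ciphertexts; the result decrypts to the product of the plaintexts mod N."""
    return (c1 * c2) % N


c = multiply_ciphertexts(encrypt(7), encrypt(3))
print(decrypt(c))  # the party doing the multiplication never saw 7 or 3
```

This works because (a^e)(b^e) = (ab)^e modulo n. Supporting both addition and multiplication on ciphertext is what took decades longer to achieve.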

1979–1982: Cryptography blossoms

During this period, the field of cryptography saw many groundbreaking theoretical results. Adi Shamir proposed a secret sharing method that, through an elegant algebraic construction, lets a secret be held jointly by multiple parties so that it can only be reconstructed when at least a threshold number of them participate.

An infinite number of quadratic polynomials can be drawn through 2 points; three points are required to uniquely determine a quadratic polynomial. This diagram is for illustration only: Shamir's scheme uses polynomials over a finite field, which are not easily represented in 2D.

Michael Rabin proposed Oblivious Transfer in his research. This protocol addresses the trust problem between two parties exchanging information: the receiver obtains information securely, while the sender cannot tell what the receiver actually obtained.

In 1982, Andrew Chi-Chih Yao (Yao Qizhi) proposed the famous Millionaires' Problem, which presents a complex cryptographic idea in a vivid and humorous way: how can two millionaires determine who is richer without revealing how much either of them is worth? Yao's approach drew on abstract logical reasoning and ideas from game theory. This work directly inspired the subsequent boom in secure multi-party computation (MPC) research and promoted the broad application of cryptography in information sharing, data privacy protection, and other fields.
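The threshold idea can be sketched in Python as follows. The prime 2**127 - 1 and the function names are assumptions made for illustration; the secret is the constant term of a random polynomial, and reconstruction uses Lagrange interpolation at x = 0.

```python
import secrets

PRIME = 2**127 - 1  # a Mersenne prime; all arithmetic is done modulo this value


def split_secret(secret, threshold, num_shares, prime=PRIME):
    """Hide the secret as the constant term of a random degree-(threshold-1) polynomial."""
    coeffs = [secret] + [secrets.randbelow(prime) for _ in range(threshold - 1)]
    shares = []
    for x in range(1, num_shares + 1):
        y = 0
        for c in reversed(coeffs):  # Horner's rule
            y = (y * x + c) % prime
        shares.append((x, y))
    return shares


def reconstruct(shares, prime=PRIME):
    """Recover the polynomial's value at x = 0 by Lagrange interpolation."""
    secret = 0
    for i, (xi, yi) in enumerate(shares):
        num, den = 1, 1
        for j, (xj, _) in enumerate(shares):
            if i != j:
                num = (num * -xj) % prime
                den = (den * (xi - xj)) % prime
        secret = (secret + yi * num * pow(den, -1, prime)) % prime
    return secret


shares = split_secret(1234, threshold=3, num_shares=5)
print(reconstruct(shares[:3]), reconstruct(shares[2:]))
```

Any three of the five shares recover the secret, while any two are consistent with every possible secret, mirroring the two-points-versus-three-points picture above.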

These rich and in-depth research results have jointly promoted the initial prosperity of the field of modern cryptography and laid a solid foundation for future technological evolution.

2. Exploration Period (1983–1999): Theoretical Explosion and Prototype of Privacy Tools

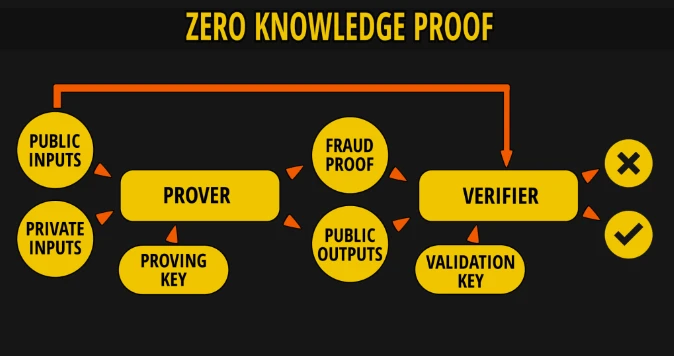

1985: The birth of zero-knowledge proofs

In 1985, Shafi Goldwasser, Silvio Micali, and Charles Rackoff proposed the concept of the Zero-Knowledge Proof (ZKP). Building on their research into interactive proof systems, they asked how one can prove knowledge of a secret without revealing the secret itself. Using interactive protocols and probabilistic methods, they designed a proof process that convinces the verifier that the prover really does hold certain information, while giving the verifier no way to infer the details of that information.

This figure shows the basic process of a zero-knowledge proof. The prover convinces the verifier that they hold a certain secret, but does not disclose the secret itself in the process.

An interesting anecdote is that the inspiration for this research reportedly came from a simple card game. The authors demonstrated zero-knowledge proofs as an interactive game at an academic conference, which surprised and puzzled the audience. ZKP not only provides an important theoretical tool for modern cryptography but also serves as a key technical pillar of privacy computing, with a profound impact on later fields such as anonymous verification, privacy protection, and blockchain.
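One round of such an interaction can be sketched with the Schnorr identification protocol, a later and simpler construction than the one in the 1985 paper, used here only as an illustration. The group parameters (p = 23, q = 11, g = 2) are toy values, and the protocol is zero-knowledge only against a verifier who picks challenges honestly.

```python
import secrets

P, Q, G = 23, 11, 2  # toy group: G has prime order Q modulo P (illustrative only)


def commit():
    """Prover picks a random nonce r and sends t = G^r mod P."""
    r = secrets.randbelow(Q)
    return r, pow(G, r, P)


def respond(r, secret_x, challenge):
    """Prover answers the challenge; r masks the secret in the response."""
    return (r + challenge * secret_x) % Q


def verify(public_y, t, challenge, s):
    """Verifier checks G^s == t * y^challenge (mod P) without learning x."""
    return pow(G, s, P) == (t * pow(public_y, challenge, P)) % P


secret_x = 7                    # known only to the prover
public_y = pow(G, secret_x, P)  # published in advance

r, t = commit()
challenge = secrets.randbelow(Q)  # chosen by the verifier
s = respond(r, secret_x, challenge)
print(verify(public_y, t, challenge, s))
```

The verifier ends up convinced that the prover knows x such that y = g^x, yet the transcript (t, challenge, s) could have been simulated without knowing x at all.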

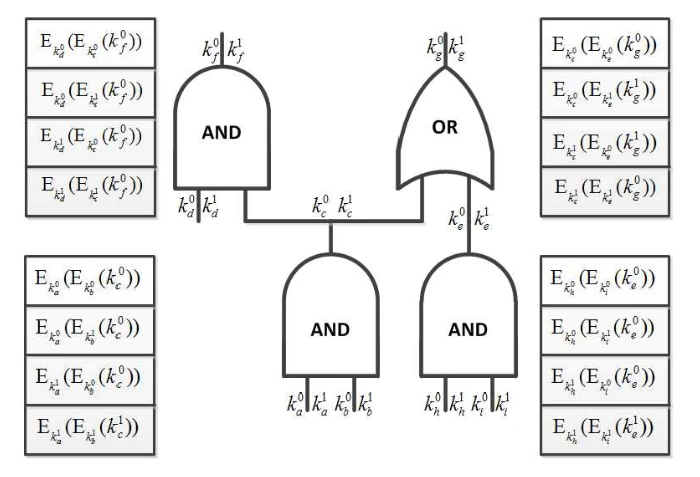

1986–1987: Breakthrough in secure multi-party computation

In 1986, Professor Andrew Chi-Chih Yao (Yao Qizhi) proposed the garbled circuit technique, which elegantly realizes a two-party secure computation protocol. The function to be computed is expressed as a Boolean circuit; one party garbles (encrypts) the circuit and hands it to the other party to evaluate, so that the evaluator can compute the result but cannot see the other party's input. Yao's approach combines cryptography with computational complexity theory and is regarded as a breakthrough in computer science.

This figure shows the basic structure of the Garbled Circuit technique proposed by Yao, demonstrating how two-party secure computation is achieved by garbling a circuit.

An interesting note is that Yao's interdisciplinary background often let him approach problems from unexpected angles, and his results have jokingly been called the black magic of cryptography in the academic community. Soon after, in 1987, Oded Goldreich, Silvio Micali, and Avi Wigderson proposed the famous GMW protocol, which extended secure computation from two parties to any number of parties, enabling multiple participants to compute jointly without exposing their inputs. These achievements substantially advanced the theory and practice of secure multi-party computation (MPC), moving privacy computing from pure theory toward feasibility.
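To give a feel for the idea, the sketch below garbles a single AND gate in Python. It is a simplified teaching construction, not Yao's exact scheme: wire labels are random 16-byte strings, each table row is masked with a SHA-256 hash of the two input labels, and an all-zero tag marks the row that decrypts correctly. In a full protocol the evaluator would obtain the label for its own input through oblivious transfer, which is omitted here.

```python
import hashlib
import secrets

LABEL_LEN = 16
TAG = bytes(LABEL_LEN)  # all-zero tag that identifies a correctly decrypted row


def _pad(label_a, label_b):
    return hashlib.sha256(label_a + label_b).digest()  # 32 bytes


def _xor(a, b):
    return bytes(x ^ y for x, y in zip(a, b))


def garble_and_gate():
    """Return (labels, table): two random labels per wire and four shuffled encrypted rows."""
    labels = {w: (secrets.token_bytes(LABEL_LEN), secrets.token_bytes(LABEL_LEN))
              for w in ("a", "b", "out")}
    table = []
    for bit_a in (0, 1):
        for bit_b in (0, 1):
            out_label = labels["out"][bit_a & bit_b]
            pad = _pad(labels["a"][bit_a], labels["b"][bit_b])
            table.append(_xor(pad, out_label + TAG))
    secrets.SystemRandom().shuffle(table)  # hide which row belongs to which inputs
    return labels, table


def evaluate(table, label_a, label_b):
    """Holding one label per input wire, recover exactly one output label."""
    pad = _pad(label_a, label_b)
    for row in table:
        plain = _xor(pad, row)
        if plain[LABEL_LEN:] == TAG:
            return plain[:LABEL_LEN]
    raise ValueError("no row decrypted")


labels, table = garble_and_gate()
out = evaluate(table, labels["a"][1], labels["b"][1])
print(out == labels["out"][1])
```

The evaluator only ever sees random-looking labels, so it learns the output label of the gate without learning which bits the input labels stand for.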
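The flavor of multi-party computation can be shown with a much simpler building block than GMW itself: a secure sum based on additive secret sharing. In this Python sketch (an illustration that assumes honest-but-curious parties; the modulus and names are hypothetical), each party splits its input into random shares, and only sums of shares are ever revealed.

```python
import secrets

MODULUS = 2**61 - 1  # all shares live modulo this value (illustrative choice)


def share(value, n_parties, modulus=MODULUS):
    """Split value into n random-looking shares that sum to value modulo the modulus."""
    shares = [secrets.randbelow(modulus) for _ in range(n_parties - 1)]
    shares.append((value - sum(shares)) % modulus)
    return shares


def secure_sum(inputs, modulus=MODULUS):
    n = len(inputs)
    # Row i holds the shares that party i sends out, one to each party.
    distributed = [share(v, n, modulus) for v in inputs]
    # Party j adds up the shares it received and publishes only that partial sum.
    partial_sums = [sum(distributed[i][j] for i in range(n)) % modulus
                    for j in range(n)]
    return sum(partial_sums) % modulus


print(secure_sum([10, 20, 12]))
```

Each individual share is uniformly random, so no single party learns another party's input, yet the published partial sums add up to the true total. GMW goes further by sharing bits and evaluating whole Boolean circuits, using oblivious transfer for AND gates.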

1996: Early ideas for federated learning

In 1996, David W. Cheung proposed an association rule mining algorithm for distributed systems. Although the term federated learning did not yet exist, the core idea of his work anticipated what later became popular under that name. His distributed data mining method does not require all data to be centralized; instead, multiple independent data owners process their own data locally and exchange only the mining results.

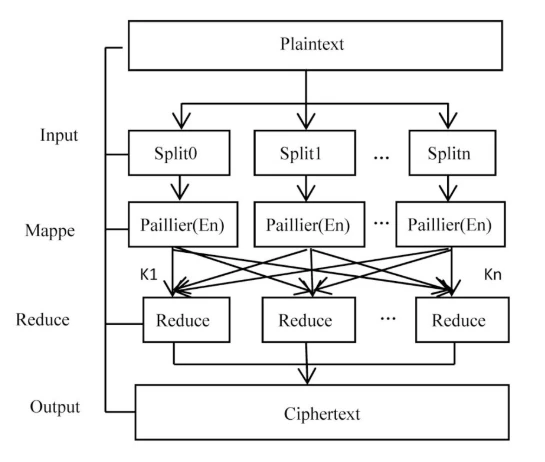

This figure shows the parallel encryption process of the Paillier encryption scheme, illustrating how addition operations are performed in the ciphertext state.

Cheung's research story is also quite interesting. He originally set out to solve the problem of data mining within large enterprises, but in doing so he inadvertently provided a theoretical prototype for future federated learning. His work did not attract widespread attention at the time. Only more than 20 years later, as privacy issues became increasingly prominent and companies such as Google began to adopt federated learning on a large scale, did its foresight become clear.

1999: Additive homomorphic encryption becomes practical

In 1999, Pascal Paillier proposed a new additively homomorphic public-key encryption scheme that allows ciphertexts to be added directly without decryption. This work removed a long-standing obstacle to the practical use of homomorphic encryption. Using a number-theoretic construction based on composite residuosity, he achieved a secure additive homomorphism, so that data can be processed while it remains encrypted, which significantly advanced real-world applications of encrypted data.

The Paillier scheme is widely used in practical scenarios such as electronic voting and privacy-preserving data analysis. Paillier's initial inspiration for the scheme reportedly came from the privacy problem in anonymous electronic voting. This demand-driven approach is not only of theoretical significance but has also greatly promoted the industrial development of homomorphic encryption technology.
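The additive property can be demonstrated with a toy Paillier implementation in Python. The primes 47 and 59 and the choice g = n + 1 are simplifying assumptions for illustration; real deployments use primes of 1024 bits or more.

```python
import math
import secrets


def paillier_keygen(p=47, q=59):
    """Toy key generation with generator g = n + 1 (illustrative primes only)."""
    n = p * q
    lam = (p - 1) * (q - 1) // math.gcd(p - 1, q - 1)  # lcm(p-1, q-1)
    mu = pow(lam, -1, n)  # valid because g = n + 1 (requires Python 3.8+)
    return n, (lam, mu)


def paillier_encrypt(n, message):
    n_sq = n * n
    while True:
        r = secrets.randbelow(n - 1) + 1  # random blinding factor in 1 .. n-1
        if math.gcd(r, n) == 1:
            break
    return (pow(n + 1, message, n_sq) * pow(r, n, n_sq)) % n_sq


def paillier_decrypt(n, private_key, ciphertext):
    lam, mu = private_key
    u = pow(ciphertext, lam, n * n)
    return ((u - 1) // n * mu) % n


def paillier_add(n, c1, c2):
    """Multiplying ciphertexts adds the underlying plaintexts modulo n."""
    return (c1 * c2) % (n * n)


n, private_key = paillier_keygen()
total = paillier_add(n, paillier_encrypt(n, 20), paillier_encrypt(n, 22))
print(paillier_decrypt(n, private_key, total))
```

In an electronic voting setting, for instance, a tallying server could multiply encrypted ballots together and only the key holder would decrypt the final count.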

During this period, cryptographic theory flourished and various novel technologies and methods emerged, laying a solid foundation for the vigorous development of privacy computing and providing rich tools and methods for future technological applications.

3. Growth period (2000–2018): The privacy computing framework takes shape

2006: The Pioneering of Differential Privacy

In 2006, Cynthia Dwork of Microsoft Research proposed the theory of differential privacy, which provides rigorous mathematical guarantees for data privacy in the big data era. Dwork's approach adds carefully calibrated random noise to query results, so that adding or removing any single record has only a small, bounded effect on the output distribution, thereby protecting the privacy of each individual.

This figure shows the basic mechanism of differential privacy, which protects the privacy of individual data by adding random noise to the query results.
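A common way to realize this is the Laplace mechanism, sketched below in Python for a counting query. The function names and sample data are hypothetical, and the sketch ignores floating-point subtleties that matter in production implementations.

```python
import random


def laplace_noise(scale, rng=random):
    """Sample Laplace(0, scale) as the difference of two exponential variables."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(records, predicate, epsilon, rng=random):
    """Answer a counting query with epsilon-differential privacy."""
    true_count = sum(1 for record in records if predicate(record))
    # Adding or removing one record changes a count by at most 1 (sensitivity 1),
    # so the noise scale is 1 / epsilon.
    return true_count + laplace_noise(1.0 / epsilon, rng)


ages = [18, 25, 31, 47, 52, 60]
print(private_count(ages, lambda age: age >= 30, epsilon=0.5))
```

A smaller epsilon means more noise and stronger privacy; averaged over many independent runs the noisy answers center on the true count, but any single answer reveals little about one individual.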

Interestingly, when Dwork was promoting differential privacy in the early days, she used a phone book as an example to vividly illustrate the real risks of privacy leakage. She pointed out that although the anonymized data seems safe, sensitive privacy can still be restored by combining external information. Her research has pioneered a new standard for data privacy protection and has been widely used in medical data, demographics, social science research and other fields, profoundly affecting the development path of subsequent privacy computing technology.

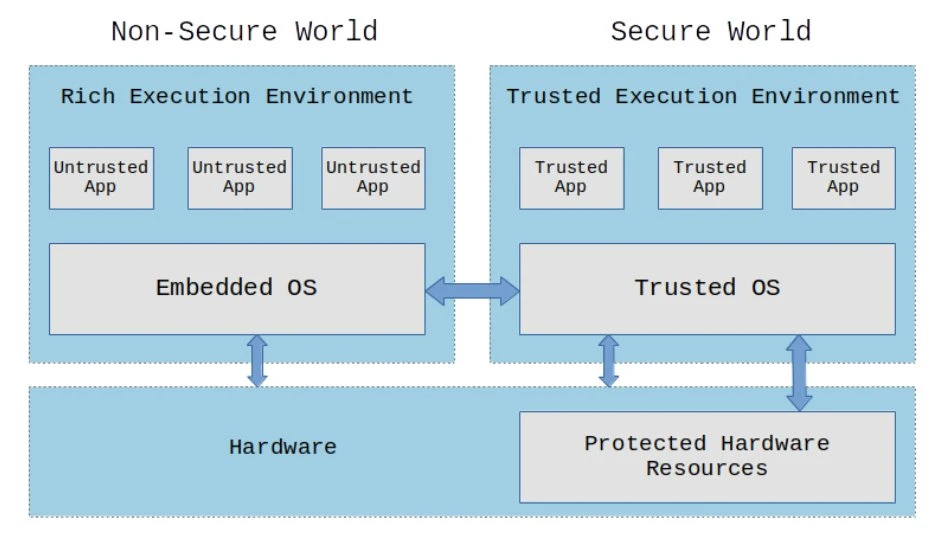

2009: Breakthrough in Fully Homomorphic Encryption and Trusted Execution Environment (TEE)

2009 was a critical year for the development of cryptographic technology. The Open Mobile Terminal Platform (OMTP) proposed a predecessor to the Trusted Execution Environment (TEE), an attempt to build an isolated secure environment at the hardware level to protect sensitive data on mobile devices.

This figure shows the basic architecture of TEE, illustrating how to create an isolated execution environment at the hardware level to protect the security of sensitive data.

In the same year, Craig Gentry of IBM proposed the first Fully Homomorphic Encryption (FHE) scheme. It allows arbitrary computation on data while the data remains encrypted: nothing needs to be decrypted during processing, and decrypting the output yields the same result as computing on the plaintext. Gentry's research is the stuff of legend. It is said that after years of work, he had a sudden inspiration during a walk and finally solved a problem that had occupied the academic community for decades. His breakthrough opened a new chapter in computing on ciphertext, making it possible in principle to use encrypted data safely in cloud computing, financial data analysis, and other scenarios.

This figure shows the workflow of fully homomorphic encryption, explaining how to perform arbitrary calculations in the ciphertext state and obtain the correct result after decryption.

2013: Pioneer in Federated Learning for Healthcare

In 2013, Professor Wang Shuang's team proposed EXPLORER, a federated learning system for medical data and a pioneering effort in privacy computing. It was the first practical implementation of secure model training on distributed medical data. The team combined distributed machine learning with privacy protection techniques to design a model training method that lets institutions collaborate without sharing their original data.

The original motivation for this research was reportedly due to the difficulty of data sharing in actual medical research. During the research process, the team went through multiple technical iterations and interdisciplinary collaborations, and finally successfully built a technical framework that can safely share medical data without leaking patient privacy. The EXPLORER system quickly became a benchmark for international medical data security protection and promoted new practices in global medical data collaboration.

2015–2016: The Industrialization of Privacy Computing

From 2015 to 2016, privacy computing reached a milestone in industrialization. Intel released the first commercial trusted execution environment technology, SGX (Software Guard Extensions), which allows applications to be executed in isolation at the hardware level to ensure the security of sensitive data and programs. This breakthrough has enabled privacy protection to enter real application scenarios, such as secure cloud computing and financial transactions.

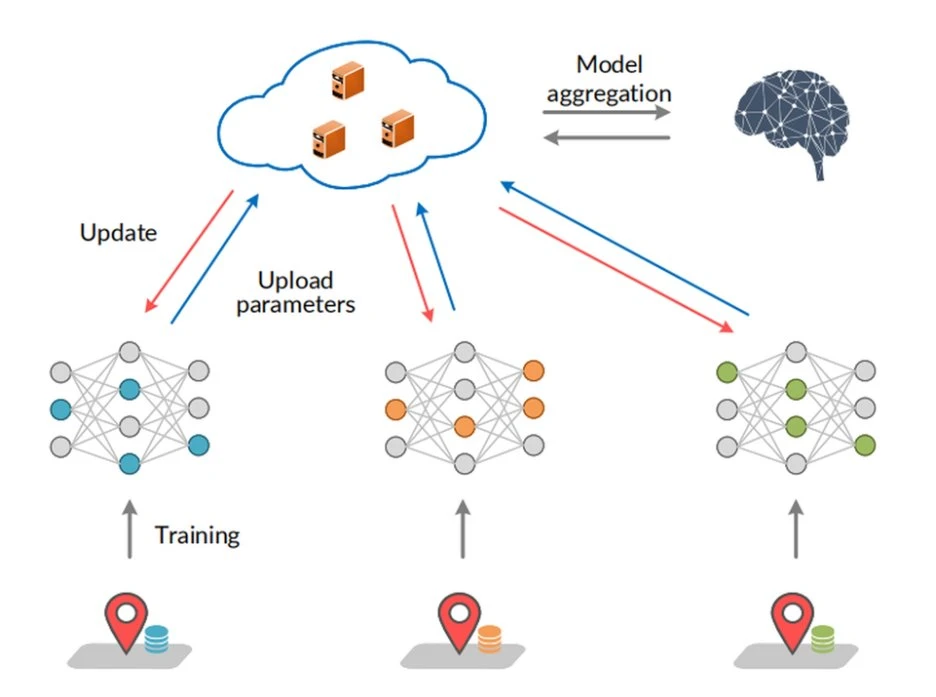

At the same time, the Google AI team proposed the Federated Learning framework in 2016, integrating the concept of privacy computing directly into mobile terminal applications. Federated learning allows data to be trained locally on the device and then the model parameters are centrally integrated without directly uploading sensitive data. This method not only improves data security, but also significantly enhances user privacy protection capabilities.

This figure shows the basic process of federated learning, illustrating how to achieve distributed machine learning through local model training and parameter aggregation without sharing the original data.
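The train-locally-then-aggregate loop can be sketched in plain Python. The example below follows the general idea of federated averaging on a one-parameter linear model; the data, learning rate, and function names are illustrative assumptions rather than details of Google's system.

```python
def local_update(weight, data, lr=0.01, epochs=5):
    """Gradient descent on one client's private (x, y) pairs for the model y = weight * x."""
    for _ in range(epochs):
        grad = sum(2 * (weight * x - y) * x for x, y in data) / len(data)
        weight -= lr * grad
    return weight


def federated_average(client_datasets, rounds=50, lr=0.01):
    """The server sees only model weights, never the clients' raw data."""
    global_weight = 0.0
    total = sum(len(d) for d in client_datasets)
    for _ in range(rounds):
        # Each client starts from the current global model and trains locally.
        local_weights = [local_update(global_weight, d, lr) for d in client_datasets]
        # The server averages the results, weighted by each client's data size.
        global_weight = sum(w * len(d)
                            for w, d in zip(local_weights, client_datasets)) / total
    return global_weight


clients = [
    [(1.0, 2.0), (2.0, 4.0)],
    [(3.0, 6.0)],
    [(4.0, 8.0), (5.0, 10.0), (6.0, 12.0)],
]
print(federated_average(clients))
```

All three clients hold data drawn from y = 2x, so the shared weight approaches 2 even though no client ever reveals its data points. In practice the exchanged parameters are often further protected with secure aggregation or differential privacy.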

Interestingly, the Google Federated Learning Project was originally codenamed “Project Bee”, implying that multiple terminals contribute separately like bees and converge into an overall intelligent model. This vivid metaphor also shows how privacy computing technology can move from abstract theory to widespread application in real life.

4. Application period (2019–2024): Privacy computing becomes practical

2019: Federated Transfer Learning and the Birth of the FATE System

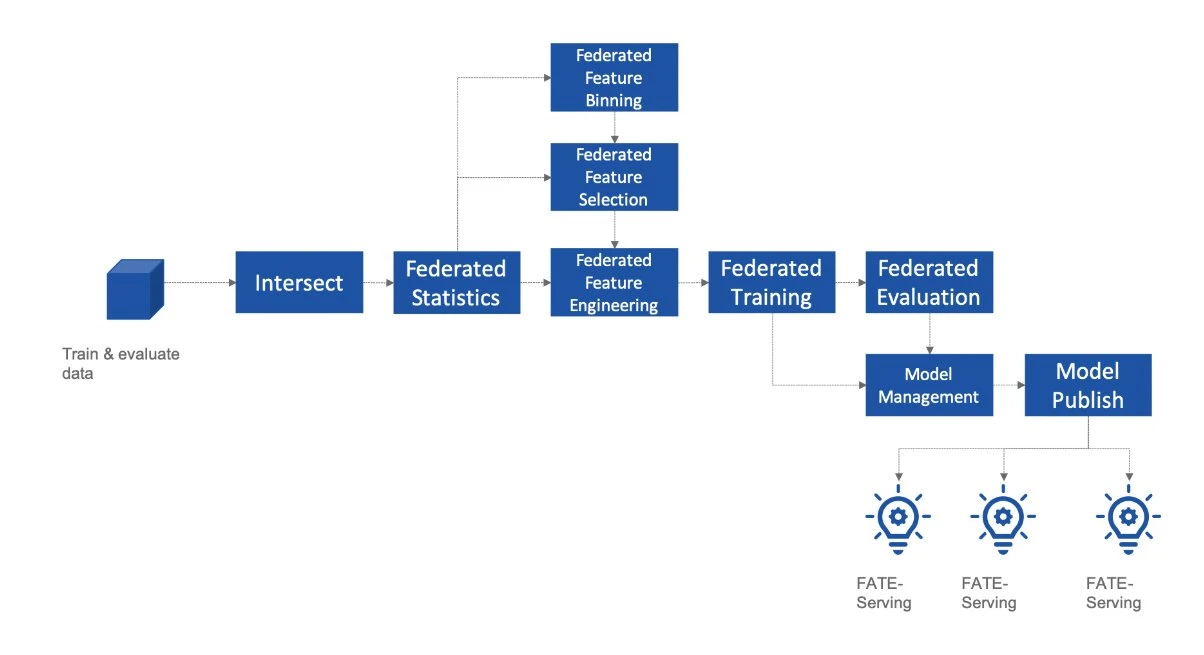

In 2019, Professor Qiang Yang and his team at the Hong Kong University of Science and Technology officially launched the theory of Federated Transfer Learning and the open source system FATE (Federated AI Technology Enabler), which greatly advanced the industrialization and engineering of federated learning. The team's research combines federated learning with transfer learning, so that models and knowledge can be transferred across different domains and scenarios without sharing the original data, which helps break down data silos.

This figure shows the architecture of the FATE system and explains how to achieve cross-institutional data collaboration and model training through federated learning.

An interesting anecdote is that at the official open source release event for FATE, the number of participants far exceeded expectations because of the industry's strong interest in the technology, and the organizer had to switch to a larger venue at the last minute. The episode reflects the industry's enthusiasm for putting federated learning into practice.

The advent of the FATE system not only promoted the rapid implementation of federated learning technology, but also provided a technical framework for subsequent data cooperation. It was quickly applied to practical scenarios such as financial risk control, medical diagnosis, and smart government affairs, providing a substantial solution for data sharing and privacy protection.

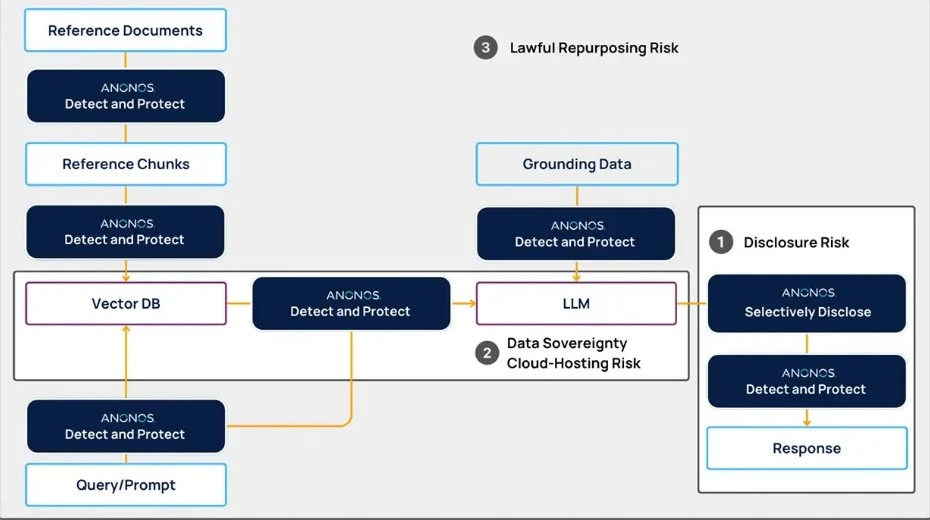

Privacy computing becomes data infrastructure

With the advent of the data age, the data factor market has developed rapidly, and data security and privacy protection have gradually become core issues of concern to various industries. At the same time, data compliance requirements in various countries continue to increase, prompting privacy computing technology to quickly integrate with important industries such as finance, medical care, and government affairs.

In the financial sector, privacy computing technologies such as fully homomorphic encryption (FHE), secure multi-party computation (MPC), and zero-knowledge proofs (ZKP) are widely used in risk control and anti-fraud scenarios to ensure data security and compliance. In the medical field, differential privacy (DP) and federated learning (FL) protect patient privacy and promote cross-institutional medical research and collaboration.

The government sector actively adopts Trusted Execution Environment (TEE) technology to build a secure and efficient government data processing platform to ensure the security of sensitive information and public trust. The coordinated use of these technologies has greatly increased the speed of data value release and improved data sharing efficiency and security.

Technology collaboration and industry integration

At the current stage, the core technologies of the field, including zero-knowledge proofs (ZKP), secure multi-party computation (MPC), fully homomorphic encryption (FHE), trusted execution environments (TEE), differential privacy (DP), and federated learning (FL), are gradually moving toward collaborative, symbiotic development. This integration not only enriches the means of privacy protection but also improves the overall practicality and reliability of privacy computing.

Privacy computing is no longer confined to theoretical research. It has become widely used infrastructure that supports the secure circulation and value sharing of data, forming a new ecosystem of "privacy computing + industry". In the future, privacy computing will be further integrated into the digital transformation of various industries and become an important engine of industrial innovation, economic development, and social progress.

These theoretical explorations and real-world deployments mark a new stage in which cryptography and privacy computing have truly moved from theoretical research to practical application, and they lay a solid foundation for the development of high-performance cryptographic applications.

5. Cryptography is now accessible to everyone (2025 to present): ZEROBASE

2025: The rise of ZEROBASE and inclusive privacy computing

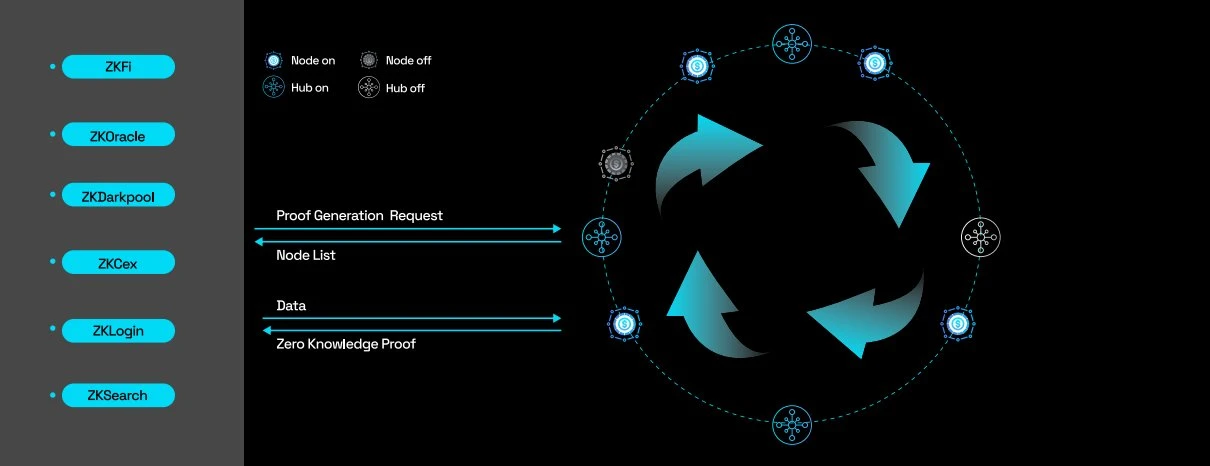

In the ZEROBASE network, nodes are divided into different subsets, each of which is managed by a HUB. Each HUB only records the status information of the NODE it is responsible for, which means that the NODE only communicates with its corresponding HUB. In this architecture, even if the number of NODEs increases, the system can continue to run smoothly by adding more HUBs.

In 2025, privacy computing has entered a new stage - the ZEROBASE stage. The ZEROBASE project has brought privacy computing into the daily lives of ordinary users in its unique way. The vision of ZEROBASE is to build a global zero-knowledge (ZK) trust infrastructure to support the implementation of privacy computing applications in multiple fields such as finance, government affairs, and healthcare.

ZEROBASE adopts a decentralized, easy-to-use architecture to lower the technical threshold for users to participate in privacy computing. It redesigned the trusted setup process of the zero-knowledge proof (ZKP) system and, for the first time, completed a trusted setup in the browser using images as the entropy source.

ZEROBASE's Guinness World Records Cryptography Challenge warm-up event page

In early 2025, ZEROBASE launched a Guinness World Record challenge, and its warm-up activities on OKX Wallet attracted more than 564,000 users worldwide.

ZEROBASE: Technological innovation and social consensus

ZEROBASE presents complex technology in a user-friendly, interactive way. With a simple image upload and a few browser operations, users can take part in a highly complex trusted setup. This design not only lowers the threshold for participation but also turns ZK technology into a social activity, greatly improving the public's awareness and acceptance of privacy computing.

On the technical level, ZEROBASE has developed a highly optimized ZKP circuit that generates more than 1,000 proofs per second, which has enabled ZEROBASE to quickly take the lead in the ZK proof market. At the same time, ZEROBASE has launched a number of innovative applications, including zkLogin (frictionless Web3 authentication), zkCEX (a hybrid exchange model with on-chain order matching and off-chain settlement), and zkStaking (a collateral system that verifies arbitrage risks and optimizes revenue generation).

The significance of ZEROBASE's Guinness World Records attempt

The Guinness World Records challenge initiated by ZEROBASE is not only a technological breakthrough but also a shift in social narrative. Unlike a traditional trusted setup ceremony, ZEROBASE's challenge has transformed privacy computing from a mysterious black box into an open, transparent, and participatory social event. Through mass participation and an open, transparent verification process, ZEROBASE addresses the long-standing trust and consensus problems of ZK technology.

I really like Stephen Hawking, and at ZEROBASE you can drive Hawking around, making him spin and jump. Oh, I'm the smartest person in the world! Let's take a ride on a flying wheelchair that goes 70 miles per hour, driven by Hawking himself!

This Guinness World Records challenge not only proves that privacy computing technology can achieve large-scale public participation, but also shows the importance of technology inclusion and social consensus. This clearly points out that the future of privacy computing technology does not lie in complex technology stacking, but in whether it can provide ordinary users with a barrier-free way to participate.

Playground entrance: https://zerobase.pro/playground/index.html

Future goal: Build a global privacy computing infrastructure

As privacy computing gradually moves out of the laboratory, it is quietly reshaping the underlying order of human collaboration. In the future, we may no longer need to worry every time we share data, because privacy protection will be a default, built-in right. A farmer can use privacy-preserving algorithms to predict climate change, a community can manage its public affairs through a DAO without intermediaries, and a patient in a remote area can use trusted AI to safely share medical records and obtain the world's best treatment options.

In the more distant future, perhaps we will also witness privacy computing providing the last line of defense for civilization in the quantum era and becoming a security star for the trusted operation of the digital society.

Looking back today, this revolution may have just begun. But just as the Internet has spread from protocols to the world, privacy computing will eventually spread to every ordinary person.

What is truly worth remembering is not a particular technological leap, but the way we choose together to move towards a freer, safer and more trustworthy digital civilization.
