Original author: Hill

This article was originally written by the SevenX research team. It is intended for discussion and educational purposes only and does not constitute investment advice. Please credit the source when citing it.

Uniswap v4 was recently released. Although its features are not yet final, we hope the community will widely explore the unprecedented possibilities it opens up. Many articles will likely cover Uniswap v4's enormous influence on DeFi. This article instead explores how Uniswap v4 inspires a new kind of blockchain infrastructure: the coprocessor.

Introduction to Uniswap v4

As stated in its white paper, Uniswap v4 has 4 main improvements:

Hooks: A hook is an externally deployed contract that executes developer-defined logic at specified points during a pool's execution. Through hooks, integrators can create concentrated liquidity pools with flexible, customizable execution.

Singleton: Uniswap v4 adopts a singleton design pattern, in which all pools are managed by a single contract, reducing pool deployment costs by 99%.

Flash Accounting: Each operation updates an internal net balance, also called a delta. External transfers are made only at the end of the lock. Flash accounting simplifies complex pool operations such as an atomic swap-and-add.

Native ETH: Supports trading pairs with native ETH in addition to WETH.
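To make flash accounting concrete, here is a toy model under simplifying assumptions. Operations inside a lock only adjust per-token deltas, and one net transfer per token is emitted at unlock. The class and method names are hypothetical, not Uniswap v4's contract interface. In the real protocol the caller must settle outstanding deltas before the lock ends, or the transaction reverts. Here the returned `settlements` list simply stands in for those transfers.

```python
from collections import defaultdict
from typing import Dict, List, Tuple


class FlashAccountingPool:
    """Toy model: operations inside a lock only update per-token deltas; the
    net amount per token is settled once, when the lock is released."""

    def __init__(self) -> None:
        self.deltas: Dict[str, int] = defaultdict(int)  # + means the caller owes the pool
        self.locked = False

    def lock(self) -> None:
        self.locked = True
        self.deltas.clear()

    def swap(self, token_in: str, amount_in: int, token_out: str, amount_out: int) -> None:
        assert self.locked, "operations are only allowed while locked"
        self.deltas[token_in] += amount_in    # caller must pay this in
        self.deltas[token_out] -= amount_out  # pool must pay this out

    def add_liquidity(self, token: str, amount: int) -> None:
        assert self.locked, "operations are only allowed while locked"
        self.deltas[token] += amount

    def unlock(self) -> List[Tuple[str, int]]:
        # One external transfer per token whose net delta is non-zero.
        settlements = sorted((t, d) for t, d in self.deltas.items() if d != 0)
        self.locked = False
        return settlements


pool = FlashAccountingPool()
pool.lock()
pool.swap("USDC", 100, "ETH", 5)   # swap 100 USDC for 5 units of ETH
pool.add_liquidity("ETH", 5)       # immediately add those 5 ETH back as liquidity
print(pool.unlock())               # → [('USDC', 100)]
```

Because the ETH leg nets to zero, only one transfer is needed instead of three. That saving is where flash accounting's gas reduction comes from.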

Most of the gas savings come from the last three improvements, but the most exciting new feature is undoubtedly the first one listed: hooks.

Hooks make liquidity pools more complex and powerful

The main enhancements in Uniswap v4 revolve around the programmability that hooks unlock. This feature makes liquidity pools more complex and powerful, and more flexible and customizable than ever before. Compare this with Uniswap v3's concentrated liquidity, which was itself a net upgrade over Uniswap v2: Uniswap v4's hooks open up a much wider range of possibilities for how liquidity pools can operate.

This release might be considered a net upgrade over Uniswap v3, but that may not hold in practice. A Uniswap v3 pool is always an upgrade over a Uniswap v2 pool. The worst you can do in Uniswap v3 is provide liquidity across the entire price range, which works on the same principle as Uniswap v2. In Uniswap v4, however, the degree of programmability means a pool may not deliver a good trading or liquidity-provision experience. Bugs may occur, and new attack vectors may emerge. Because hooks change so much about how liquidity pools operate, developers who want to use them must proceed with caution. They need to thoroughly understand how their design choices affect the pool's functionality and the risks they pose to liquidity providers.
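The claim that a full-range v3 position behaves like v2 can be checked with the real-reserve curve from the Uniswap v3 whitepaper. The notation is liquidity $L$, price range $[p_a, p_b]$, and real reserves $x$ and $y$. This is a worked check added for illustration, not a derivation from this article.

```latex
% Real-reserve curve of a v3 position with liquidity L on the range [p_a, p_b]
\left(x + \frac{L}{\sqrt{p_b}}\right)\left(y + L\sqrt{p_a}\right) = L^2

% Full-range position: let p_a \to 0 and p_b \to \infty
\frac{L}{\sqrt{p_b}} \to 0, \qquad L\sqrt{p_a} \to 0
\quad\Longrightarrow\quad x \, y = L^2 = k
```

With the range stretched to $(0, \infty)$, the virtual offsets vanish. The position reduces to the Uniswap v2 constant-product invariant $xy = k$, which is why v3's worst case is "behaves like v2".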

The introduction of hooks in Uniswap v4 represents a major shift in how code is executed on the blockchain. Traditionally, blockchain code executes in a predetermined, sequential order. Hooks allow a more flexible execution order, so specific code can be guaranteed to run before other code. This pushes complex computation out to the edges of the stack instead of solving everything within a single stack.

Essentially, hooks enable more complex calculations to be performed outside of Uniswap’s native contracts. While in Uniswap v2 and Uniswap v3, this feature could be implemented through manual calculation outside of Uniswap and triggered by external activators such as other smart contracts, Uniswap v4 integrates the hook directly into the liquidity pool’s smart contract. This integration makes the process more transparent, verifiable and trustless compared to previous manual processes.
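The hook idea can be sketched with a toy constant-product pool that calls registered callbacks before and after its core swap math. The hook-point names (`before_swap`, `after_swap`) and the registry are hypothetical simplifications. In Uniswap v4, hooks are separate contracts chosen at pool creation, and a reverting hook reverts the whole transaction.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class Pool:
    """Toy constant-product pool with hypothetical hook points (not the Uniswap v4 interface)."""
    reserve_x: float
    reserve_y: float
    hooks: Dict[str, List[Callable]] = field(default_factory=dict)

    def register(self, point: str, fn: Callable) -> None:
        self.hooks.setdefault(point, []).append(fn)

    def _run(self, point: str, **ctx) -> None:
        for fn in self.hooks.get(point, []):
            fn(self, **ctx)

    def swap_x_for_y(self, dx: float) -> float:
        self._run("before_swap", dx=dx)  # custom logic may raise to abort the swap
        k = self.reserve_x * self.reserve_y
        dy = self.reserve_y - k / (self.reserve_x + dx)  # constant-product output, fees ignored
        self.reserve_x += dx
        self.reserve_y -= dy
        self._run("after_swap", dx=dx, dy=dy)  # e.g. record an oracle observation
        return dy


def cap_trade_size(pool: Pool, dx: float) -> None:
    """Hypothetical before-swap hook: reject oversized trades."""
    if dx > 500:
        raise ValueError("trade too large")  # aborts before any state changes


pool = Pool(1000.0, 1000.0)
pool.register("before_swap", cap_trade_size)
print(pool.swap_x_for_y(100.0))  # about 90.909
```

The pool's core math never changes. All developer-specific behavior lives in the registered callbacks, which is the separation hooks provide.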

Another benefit of hooks is extensibility. Uniswap no longer needs to rely on new smart contracts (which require liquidity migration) or forks to ship innovations. New functionality can be implemented directly through hooks, giving liquidity pools a new look.

Today’s Uniswap v4 liquidity pool is the tomorrow of other dApps

I predict that more and more dApps will push computation outside of their own smart contracts like Uniswap v4.

Uniswap v4 allows a pool's execution to be split at any step, with arbitrary conditions inserted and computation triggered outside the Uniswap v4 contract. Until now, the only comparable pattern was the flash loan, where the whole transaction is reverted if the loan is not repaid within the same transaction. Even then, the computation still happens in the flash loan contract.
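For comparison, a flash loan can be sketched as follows. The lender hands control to a single borrower callback, and the repayment check and rollback remain in the lender's own code. The names and the 9 bps fee are hypothetical. The `try`/`except` rollback stands in for the EVM reverting the entire transaction.

```python
class Reverted(Exception):
    """Stands in for an EVM revert."""


class FlashLender:
    def __init__(self, liquidity: int, fee_bps: int = 9) -> None:
        self.liquidity = liquidity
        self.fee_bps = fee_bps  # hypothetical fee in basis points

    def flash_loan(self, amount: int, borrower_callback) -> None:
        snapshot = self.liquidity                # state restored if the tx reverts
        fee = amount * self.fee_bps // 10_000
        self.liquidity -= amount
        try:
            repaid = borrower_callback(amount)   # the only insertion point offered
            self.liquidity += repaid
            if self.liquidity < snapshot + fee:  # enforcement stays in the lender
                raise Reverted("loan not repaid with fee")
        except Exception:
            self.liquidity = snapshot            # roll back everything
            raise


lender = FlashLender(1_000_000)
lender.flash_loan(10_000, lambda amt: amt + 9)  # repays principal plus fee
print(lender.liquidity)                          # → 1000009
```

The contrast with hooks is that a flash loan exposes one fixed callback. Uniswap v4 exposes several insertion points across a pool's execution.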

The design of Uniswap v4 brings many advantages that were impossible, or only poorly achievable, in Uniswap v3. For example, pools can now use embedded oracles, reducing reliance on external oracles, which often introduce attack vectors. This embedded design improves the security and reliability of price information, a key factor in how DeFi protocols function.

In addition, automation that previously had to be triggered externally can now be embedded directly in liquidity pools. This integration eases security concerns and removes the reliability issues associated with external triggers. It also lets liquidity pools run more smoothly and efficiently, improving their overall performance and user experience.

Finally, through the hooks introduced in Uniswap v4, more diverse security features can be implemented directly in the liquidity pool. In the past, the security measures adopted by liquidity pools were mainly auditing, bug bounties, and purchasing insurance. With Uniswap v4, developers can now design and implement various failsafe mechanisms and low-liquidity warnings directly into the pool’s smart contracts. This development not only enhances the security of the pool, but also provides liquidity providers with greater transparency and control.

Compared with feature phones, the advantage of smartphones is programmability. Smart contracts have long lived in the shadow of being mere "persistent scripts". Now, with Uniswap v4, liquidity pool smart contracts have received a programmable upgrade and become genuinely smarter. Given the chance to upgrade from a Nokia to an iPhone, I can't see why every dApp wouldn't want to move in this direction. Since a Nokia is more reliable than an iPhone, I can understand some smart contracts wanting to keep the status quo. But I'm talking about where dApps are headed in the future.

dApps want to use their own hooks, which creates scaling issues

Imagine applying this to all other dApps, where we can insert conditions to trigger and then insert arbitrary calculations between the raw transaction sequences.

This sounds like how MEV works, but MEV is not an open design space for dApp developers. It is more like a hike into an uncharted dark forest: at best you seek external MEV protection and hope for the best.

Suppose the flexibility of Uniswap v4 inspires a new generation of dApps (or upgrades to existing ones) to adopt a similar philosophy and make their execution sequences more programmable. These dApps are typically deployed on a single chain (L1 or L2), so we expect most of their state changes to run on that chain.

The additional calculations inserted during dApp state changes may be too complex and cumbersome to run on the chain. We may quickly exceed the gas limit, or it may not be possible at all. In addition, many challenges arise, especially in terms of security and composability.

Not all computation is created equal. dApps' reliance on external protocols such as oracles and automation networks is evidence of this. However, that reliance can introduce security risks.

To summarize the problem: integrating all calculations into state-changing smart contract execution on a single chain is far from optimal.

Solution Tip: Already Solved in the Real World

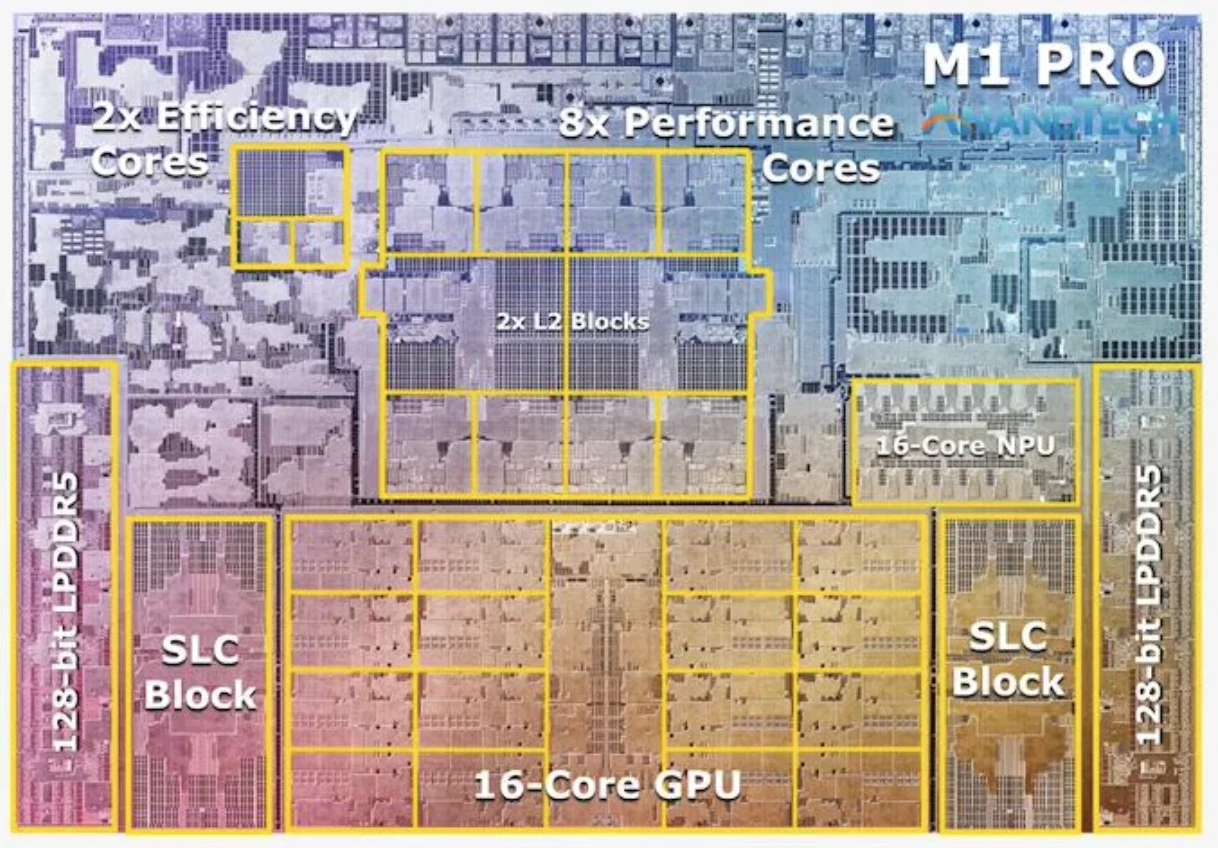

In order to solve this problem caused by the new generation of dApps (probably heavily inspired by Uniswap v4), we must delve into the core of the problem: this single chain. Blockchain operates like a distributed computer, using a single CPU to handle all tasks. On PCs, modern CPUs have made great strides in solving this problem.

Computers have already made this transition, from single-core monolithic CPUs to modular designs composed of efficiency cores, performance cores, GPUs, and NPUs.

dApp computing can scale in a similar way. Specialized processors can work together, with some computation outsourced away from the main processor. That combination can deliver flexibility, optimality, security, scalability, and upgradeability.

Practical solution

There are actually only two types of coprocessors:

External coprocessor

Embedded coprocessor

External coprocessor

External coprocessors are like cloud GPUs: easy to use and powerful, but communication between the CPU and GPU adds network latency. Furthermore, you don't ultimately control the GPU, so you have to trust that it's doing its job correctly.

Take Uniswap v4 as an example. Suppose a pool adds some ETH and USDC to its liquidity based on the TWAP over the last 5 minutes. If the TWAP calculation is done in Axiom, the Uniswap v4 pool is essentially using Ethereum as its main processor and Axiom as its coprocessor.
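As a rough illustration of the computation being outsourced, here is a time-weighted average price over a 5-minute (300-second) window. The input format is an assumption: a list of `(timestamp, price)` observations, each price holding until the next one. Uniswap's built-in oracles instead store cumulative accumulators and take differences. Uniswap v3 accumulates ticks, which yields a geometric rather than arithmetic mean.

```python
from typing import List, Tuple


def twap(observations: List[Tuple[int, float]], now: int, window: int) -> float:
    """Arithmetic time-weighted average price over [now - window, now].

    observations: (timestamp, price) pairs sorted by timestamp. Each price is
    treated as constant until the next observation (a step function), and the
    first observation must be at or before the start of the window.
    """
    start = now - window
    if not observations or observations[0][0] > start:
        raise ValueError("observations do not cover the whole window")
    weighted_sum = 0.0
    for i, (t, price) in enumerate(observations):
        seg_end = observations[i + 1][0] if i + 1 < len(observations) else now
        seg_start, seg_end = max(t, start), min(seg_end, now)
        if seg_end > seg_start:  # only the part of this segment inside the window counts
            weighted_sum += price * (seg_end - seg_start)
    return weighted_sum / window


obs = [(0, 2000.0), (120, 2010.0), (240, 1990.0)]
print(twap(obs, now=300, window=300))  # → 2002.0
```

A hook would compare the result against the current price before adding liquidity. The article's point is where this loop runs: on Ethereum itself, or in a coprocessor that returns a proof of the result.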

Axiom

Axiom is Ethereum’s ZK coprocessor, which provides smart contracts with trustless access to all on-chain data and the ability to perform arbitrary expression calculations on the data.

Developers can query Axiom and use the on-chain zero-knowledge (ZK) verified results in a trustless manner in their smart contracts. To complete a query, Axiom performs three steps:

Reads: Axiom uses zero-knowledge proofs to trustlessly read correct data from the block headers, state, transactions, and receipts of any historical Ethereum block. All Ethereum on-chain data is encoded in one of these forms, so Axiom can access any data that an archive node can access.

Compute: Once the data is fetched, Axiom applies proven computational primitives to it. These range from basic analytics (sum, count, max, min) to cryptography (signature verification, key aggregation) and machine learning (decision trees, linear regression, neural network inference). The validity of every computation is verified in a zero-knowledge proof.

Verification: Axiom ships with a zero-knowledge validity proof for the results of each query, proving that (1) the input data has been correctly fetched from the chain, and (2) the computation has been applied correctly. This zero-knowledge proof is verified on-chain in the Axiom smart contract, and the final result is then made available to all downstream smart contracts in a trustless manner.
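The read-then-compute pattern can be sketched without ZK under explicit substitutions. Axiom proves reads against Ethereum's Merkle-Patricia tries under block headers, inside a ZK circuit. This sketch instead uses a plain SHA-256 binary Merkle tree and checks inclusion proofs directly. It shows the idea of only computing over data that provably belongs to a committed root. It is not Axiom's API or proof system.

```python
import hashlib
from typing import List, Tuple

Proof = List[Tuple[bytes, bool]]  # (sibling hash, sibling is on the right)


def h(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def merkle_root(leaves: List[bytes]) -> bytes:
    level = [h(leaf) for leaf in leaves]
    while len(level) > 1:
        if len(level) % 2:  # duplicate the last node on odd-sized levels
            level.append(level[-1])
        level = [h(level[i] + level[i + 1]) for i in range(0, len(level), 2)]
    return level[0]


def merkle_proof(leaves: List[bytes], index: int) -> Proof:
    level = [h(leaf) for leaf in leaves]
    proof: Proof = []
    while len(level) > 1:
        if len(level) % 2:
            level.append(level[-1])
        sibling = index ^ 1
        proof.append((level[sibling], sibling > index))
        level = [h(level[i] + level[i + 1]) for i in range(0, len(level), 2)]
        index //= 2
    return proof


def verify(leaf: bytes, proof: Proof, root: bytes) -> bool:
    """'Read' step: check that a leaf is committed under the trusted root."""
    node = h(leaf)
    for sibling, is_right in proof:
        node = h(node + sibling) if is_right else h(sibling + node)
    return node == root


def verified_sum(claims: List[Tuple[int, Proof]], root: bytes) -> int:
    """'Compute' step: aggregate only values whose inclusion proof checks out."""
    total = 0
    for value, proof in claims:
        if not verify(value.to_bytes(32, "big"), proof, root):
            raise ValueError("invalid inclusion proof")
        total += value
    return total


values = [5, 7, 11]
leaves = [v.to_bytes(32, "big") for v in values]
root = merkle_root(leaves)  # plays the role of a trusted block-header commitment
claims = [(v, merkle_proof(leaves, i)) for i, v in enumerate(values)]
print(verified_sum(claims, root))  # → 23
```

In Axiom, both the inclusion checks and the aggregation are wrapped in a single ZK proof. The on-chain contract then verifies that one proof instead of every Merkle path.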

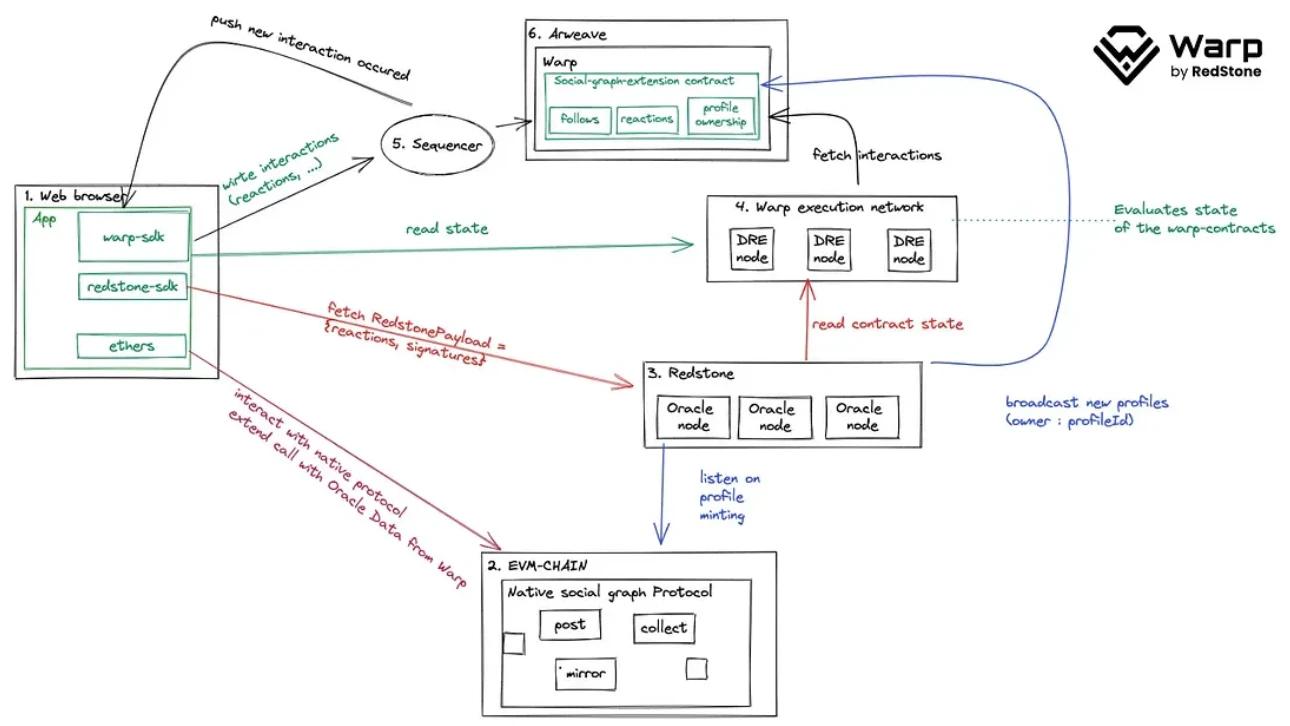

Warp Contracts (via RedStone)

Warp contracts are the most common SmartWeave implementation, an architecture designed to create a reliable, fast, production-ready smart contract platform/engine on Arweave. In essence, SmartWeave is an ordered array of Arweave transactions that benefits from the lack of a transaction block inclusion fee market on Arweave. These unique properties allow for unlimited transaction data at no additional cost beyond storage costs.

SmartWeave uses an approach called lazy evaluation, which shifts responsibility for executing smart contract code from network nodes to the contract's users. In essence, the computation needed to evaluate transactions is deferred until it is actually needed. This reduces the workload on network nodes and lets transactions be processed more efficiently. With this approach, users can perform as much computation as they want without incurring additional fees, which is not possible on other smart contract systems. Of course, evaluating a contract with thousands of interactions on a user's CPU is ultimately impractical. To overcome this, abstraction layers such as Warp's DRE were developed. This layer consists of a distributed network of validators that handle contract evaluation. The result is significantly faster response times and a better user experience.
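Lazy evaluation can be sketched as a pure state-transition function folded over the ordered interaction log, run only when someone reads the state. Results are cached so later reads process only new interactions. The `handle(state, action)` shape is loosely modelled on SmartWeave's contract convention. The token contract, the action fields, and the `LazyEvaluator` class are hypothetical. The cache roughly corresponds to the repeated work an evaluation network such as Warp's DRE saves users.

```python
from typing import Dict, List

Interaction = Dict  # e.g. {"caller": "alice", "fn": "transfer", "to": "bob", "qty": 4}


def handle(state: Dict, action: Interaction) -> Dict:
    """Hypothetical token contract: a pure function (state, action) -> new state."""
    balances = dict(state["balances"])
    if action["fn"] == "transfer":
        sender, qty = action["caller"], action["qty"]
        if balances.get(sender, 0) < qty:
            return state  # invalid interaction: ignored, state unchanged
        balances[sender] -= qty
        balances[action["to"]] = balances.get(action["to"], 0) + qty
    return {**state, "balances": balances}


class LazyEvaluator:
    """Replays the ordered log only when state is read, caching progress."""

    def __init__(self, initial_state: Dict, handler) -> None:
        self.handler = handler
        self.cached_state = initial_state
        self.cached_upto = 0  # number of interactions already applied

    def read_state(self, log: List[Interaction]) -> Dict:
        for action in log[self.cached_upto:]:  # only the new tail of the log
            self.cached_state = self.handler(self.cached_state, action)
        self.cached_upto = len(log)
        return self.cached_state


log = [
    {"caller": "alice", "fn": "transfer", "to": "bob", "qty": 4},
    {"caller": "bob", "fn": "transfer", "to": "carol", "qty": 10},  # invalid, skipped
    {"caller": "bob", "fn": "transfer", "to": "carol", "qty": 1},
]
ev = LazyEvaluator({"balances": {"alice": 10}}, handle)
print(ev.read_state(log)["balances"])  # → {'alice': 6, 'bob': 3, 'carol': 1}
```

Nodes only need to store the log in order. Whoever reads the state pays the cost of evaluating it.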

Additionally, SmartWeave’s open design enables developers to write logic in any programming language, providing a new alternative to the often rigid Solidity code base. Seamless SmartWeave integration enhances existing social graph protocols built on EVM chains by delegating certain high-cost or high-throughput operations to Warp, leveraging the benefits of both technologies.

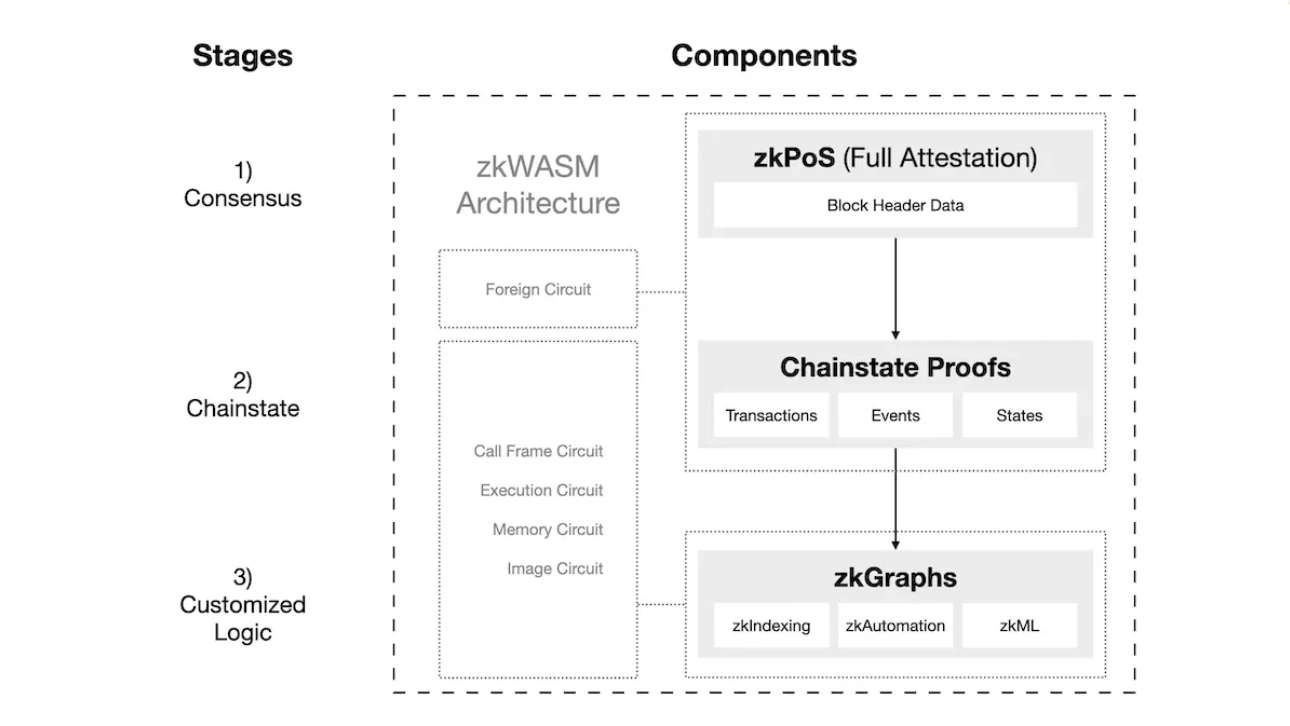

Hyper Oracle

Hyper Oracle is a ZK oracle network designed specifically for blockchain. Currently, the ZK Oracle network only operates on the Ethereum blockchain. It uses zkPoS to retrieve data from each block of the blockchain as a data source while processing the data using a programmable zkGraph running on zkWASM, all in a trustless and secure manner.

Developers can use JavaScript to define custom off-chain computations, deploy these computations to the Hyper Oracle network, and leverage Hyper Oracle Meta Apps to index and automate their smart contracts.

Hyper Oracle's indexing and automation Meta Apps are fully customizable and flexible. Any computation can be defined, and all computations (even machine learning) are secured by the generated zero-knowledge proofs.

The Ethereum blockchain is the original on-chain data source for ZK oracles, but any network can be used in the future.

Hyper Oracle ZK Oracle Node consists of two main components: zkPoS and zkWASM.

- zkPoS uses zero-knowledge to prove the consensus of Ethereum and obtain the block header and data root of the Ethereum blockchain. The zero-knowledge proof generation process can be outsourced to a decentralized network of provers. zkPoS acts as the outer loop of zkWASM.

- zkPoS provides the block header and data root to zkWASM. zkWASM uses this data as basic input for running zkGraph.

- zkWASM runs the custom data mappings, or any other computation, defined by a zkGraph and generates zero-knowledge proofs of these operations. ZK oracle node operators can choose how many zkGraphs to run, from one up to all deployed zkGraphs. The zero-knowledge proof generation process can be outsourced to a decentralized network of provers.

The ZK oracle outputs off-chain data, and developers can use this off-chain data through Hyper Oracle Meta Apps (to be introduced in subsequent chapters). The data also comes with zero-knowledge proofs proving its validity and computation.
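The two-stage structure (an outer loop that yields trusted block headers, and an inner loop that runs user-defined mappings over them) can be sketched with heavy simplifications. Real zkPoS proves Ethereum consensus in zero knowledge. This stand-in only checks parent-hash links back to a trusted checkpoint. The `Header` fields, hashing scheme, and mapping signature are hypothetical and are not Hyper Oracle's zkGraph API.

```python
import hashlib
import json
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Header:
    number: int
    parent_hash: str
    data_root: str  # stand-in for a block's data root

    def hash(self) -> str:
        payload = json.dumps([self.number, self.parent_hash, self.data_root])
        return hashlib.sha256(payload.encode()).hexdigest()


def outer_loop(trusted_checkpoint: str, headers: List[Header]) -> List[Header]:
    """Stand-in for zkPoS: accept headers only if they chain back to a trusted hash."""
    expected_parent = trusted_checkpoint
    for hdr in headers:
        if hdr.parent_hash != expected_parent:
            raise ValueError(f"header {hdr.number} does not link to its parent")
        expected_parent = hdr.hash()
    return headers


def inner_loop(headers: List[Header], mapping: Callable[[Header], object]) -> list:
    """Stand-in for zkWASM running a user-defined, zkGraph-style mapping."""
    return [mapping(hdr) for hdr in headers]


genesis = "00" * 32
h1 = Header(1, genesis, "root-a")
h2 = Header(2, h1.hash(), "root-b")
print(inner_loop(outer_loop(genesis, [h1, h2]), lambda hd: hd.number * 10))  # → [10, 20]
```

In the real system each stage emits a proof, so consumers of the output do not have to re-run either loop themselves.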

Other items worth mentioning

There are also projects that can be used as external coprocessors if you decide to go this route. It’s just that these projects overlap in other vertical areas of blockchain infrastructure and are not separately classified as co-processors.

RiscZero: If a dApp uses RiscZero to compute a machine learning task for an agent on the chain and provides the results to a game contract on StarkNet, it will use StarkNet as the main processor and RiscZero as the co-processor.

IronMill: If a dApp runs a zk loop in IronMill but deploys the smart contract on Ethereum, it will use Ethereum as the main processor and IronMill as the co-processor.

Potential use cases for external coprocessors

Governance and voting: Historical on-chain data can help decentralized autonomous organizations (DAOs) record the number of voting rights each member has, which is essential for voting. Without this data, members may not be able to participate in the voting process, which may hinder governance.

Underwriting: Historical on-chain data can help asset allocators evaluate fund managers' performance beyond profits. They can see how much risk a manager takes and what drawdowns the manager experiences. This helps them make better-informed decisions about compensation or potential rewards.

Decentralized exchanges: Historical price data on the chain can help decentralized exchanges trade based on past trends and patterns, potentially bringing higher profits to users. Additionally, historical trading data helps exchanges improve algorithms and user experience.

Insurance products: Insurance companies can use historical on-chain data to assess risk and set premiums for different types of policies. For example, when setting premiums for DeFi projects, insurance companies may look at past on-chain data.
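The governance case often reduces to one question: what was each member's balance at a snapshot block? Fixing the snapshot prevents tokens acquired after a proposal from being used to vote. Below is a plain replay of historical transfers as a minimal sketch. The `(block, sender, recipient, amount)` tuple format and the empty-sender-means-mint convention are assumptions. A coprocessor would perform this replay off-chain and return a proof alongside the result.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple

# (block_number, sender, recipient, amount); an empty sender marks a mint.
Transfer = Tuple[int, str, str, int]


def balances_at(transfers: Iterable[Transfer], snapshot_block: int) -> Dict[str, int]:
    """Replay transfers (given in chain order) up to and including snapshot_block."""
    balances: Dict[str, int] = defaultdict(int)
    for block, sender, recipient, amount in transfers:
        if block > snapshot_block:
            break  # later transfers cannot affect the snapshot
        if sender:
            balances[sender] -= amount
        balances[recipient] += amount
    return dict(balances)


history = [(1, "", "alice", 100), (5, "alice", "bob", 40), (9, "bob", "carol", 10)]
print(balances_at(history, snapshot_block=6))  # → {'alice': 60, 'bob': 40}
```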

Note that all of the above use cases are asynchronous. The client dApp calls the external coprocessor's smart contract when triggered in block N. When the coprocessor returns its result, that result must be accepted or verified in some form in block N+1 at the earliest. The result can therefore be used no earlier than the block after the trigger. This model really is like a cloud GPU: it can run your machine learning models well, but latency means you won't enjoy playing fast-paced games on it.
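The request/fulfil timing can be modelled as a small state machine. It assumes, as the paragraph above describes, that a coprocessor result cannot land in the block that triggered it. Here that assumption is enforced as a hard rule. The class and method names are hypothetical and do not correspond to any particular coprocessor's contracts.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional


@dataclass
class Request:
    id: int
    requested_at: int              # block N in which the dApp triggered the query
    result: Optional[int] = None
    fulfilled_at: Optional[int] = None


@dataclass
class CoprocessorClient:
    """Hypothetical request/callback flow between a dApp and an external coprocessor."""
    requests: Dict[int, Request] = field(default_factory=dict)
    next_id: int = 0

    def request(self, block: int) -> int:
        rid = self.next_id
        self.requests[rid] = Request(rid, block)
        self.next_id += 1
        return rid

    def fulfill(self, rid: int, block: int, result: int) -> None:
        req = self.requests[rid]
        # The result arrives in a later transaction, modelled as "not in block N".
        if block <= req.requested_at:
            raise ValueError("result cannot be delivered in the triggering block")
        req.result, req.fulfilled_at = result, block

    def usable_result(self, rid: int, block: int) -> Optional[int]:
        req = self.requests[rid]
        if req.fulfilled_at is not None and block >= req.fulfilled_at:
            return req.result
        return None  # the dApp must wait


client = CoprocessorClient()
rid = client.request(block=100)
print(client.usable_result(rid, 100))  # → None
client.fulfill(rid, block=101, result=42)
print(client.usable_result(rid, 101))  # → 42
```

Any dApp logic that depends on the result has to be split across at least two blocks. That split is the latency cost the cloud-GPU analogy refers to.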

Embedded coprocessor

An embedded coprocessor is like the GPU on a PC motherboard, sitting right next to the CPU. Communication latency between GPU and CPU is very small. The GPU is also entirely under your control, so you can be fairly sure it hasn't been tampered with. However, making it run machine learning as fast as a cloud GPU is expensive.

Again, take Uniswap v4 as an example. Suppose a pool deployed on Artela adds some ETH and USDC to its liquidity based on the TWAP over the last 5 minutes. If the pool is deployed in Artela's EVM and the TWAP calculation is done in Artela's WASM, the pool is essentially using Artela's EVM as its main processor and Artela's WASM as its coprocessor.

Artela

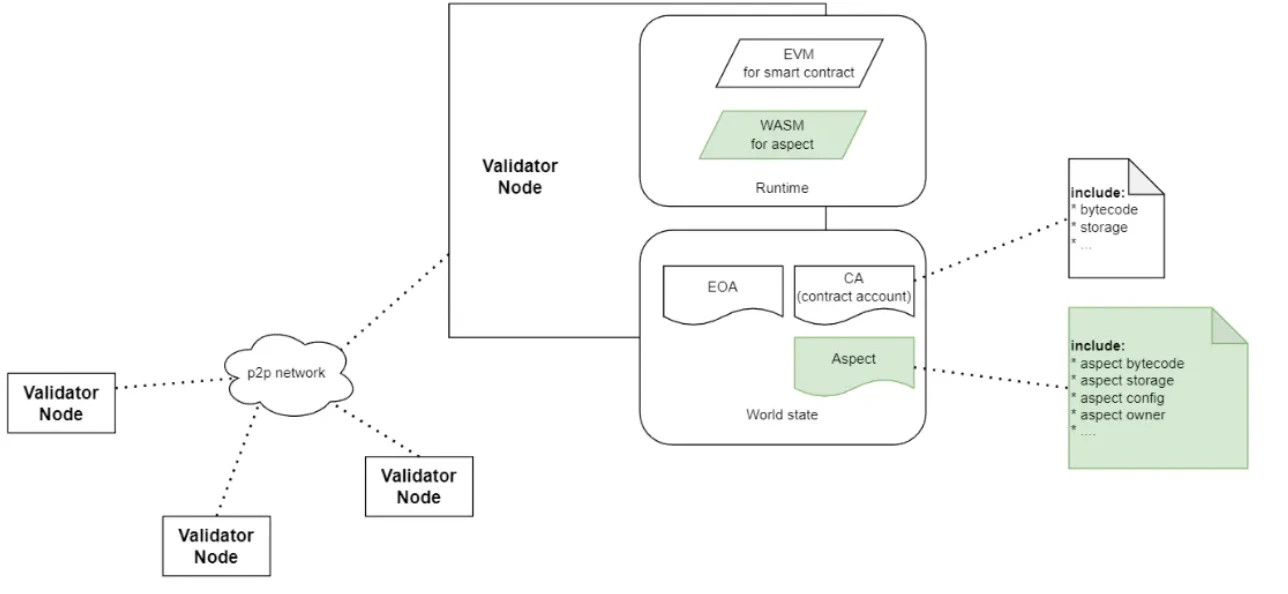

Artela is an L1 built on Tendermint BFT. It provides a framework that lets any execution layer be extended dynamically to implement custom on-chain functionality. Each Artela full node runs two virtual machines simultaneously:

EVM, the main processor that stores and updates smart contract state.

WASM, a coprocessor that stores and updates aspect state.

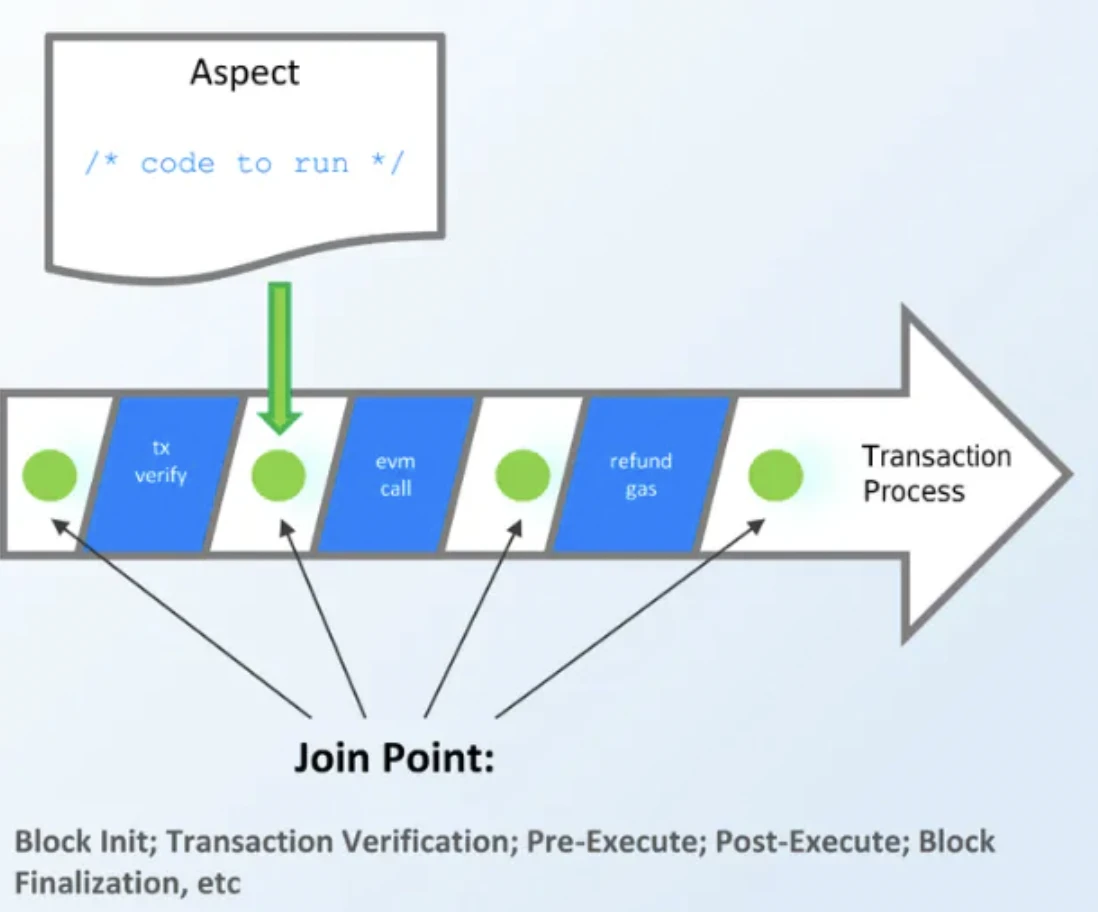

Aspects represent arbitrary computations that developers wish to run without touching the smart contract state. Think of it as a Rust script that provides dApps with custom functionality beyond the native composability of smart contracts.

If this is difficult to understand, you can try to look at it from the following two perspectives:

From the perspective of blockchain architecture

- Aspect is the new execution layer.

- In Artela, the blockchain runs two execution layers simultaneously - one for smart contracts and one for other computations.

- This new execution layer does not introduce new trust assumptions and therefore does not affect the security of the blockchain itself. Both VMs are protected by the same set of nodes running the same consensus.

From an application runtime perspective

- Aspects are programmable modules that work with smart contracts, supporting the addition of custom functions and independent execution.

- It has advantages over a single smart contract in several aspects:

-- Non-intrusive: You can intervene before and after the contract execution without modifying the smart contract code.

-- Synchronous execution: Supports hook logic throughout the entire transaction life cycle, allowing for refined customization.

-- Direct access to global status and base layer configuration, supporting system-level functions.

-- Flexible block space: Provide protocol-guaranteed independent block space for dApps with higher transaction throughput requirements.

-- Runtime upgradeability: compared with static precompiles, Aspects let dApps achieve dynamic, modular upgrades at runtime, balancing stability and flexibility.

By introducing this embedded coprocessor, Artela has achieved an exciting breakthrough: arbitrary extension modules (Aspects) can now be executed in the same transactions as smart contracts. Developers can bind their smart contracts to Aspects so that every transaction calling the contract is also processed by those Aspects.

In addition, like smart contracts, Aspects store data on-chain, so smart contracts and Aspects can read each other's global state.

These two features greatly improve the composability and interoperability between smart contracts and aspects.
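Binding can be sketched in a toy model. An Aspect is attached to a contract address without changing the contract's code. It runs before and after each call, shares temporary state between those two points, and can veto the transaction so the contract's changes are rolled back. The join-point names (`pre_tx`, `post_tx`), the `Chain` class, and the vault contract are hypothetical simplifications, not Artela's SDK. Aspects receive copies of contract state, mirroring the rule that they cannot write contract state.

```python
from typing import Callable, Dict, List


class Revert(Exception):
    """Raised to abort a transaction."""


class Chain:
    def __init__(self) -> None:
        self.storage: Dict[str, Dict[str, int]] = {}
        self.contracts: Dict[str, Callable] = {}
        self.aspects: Dict[str, List] = {}

    def deploy(self, addr: str, fn: Callable, initial: Dict[str, int]) -> None:
        self.contracts[addr] = fn
        self.storage[addr] = dict(initial)

    def bind(self, addr: str, aspect) -> None:
        # Binding leaves the contract's code untouched (non-intrusive).
        self.aspects.setdefault(addr, []).append(aspect)

    def call(self, addr: str, tx: Dict) -> None:
        snapshot = dict(self.storage[addr])
        ctx: Dict = {}  # temporary execution state shared between join points
        bound = self.aspects.get(addr, [])
        try:
            for aspect in bound:
                aspect.pre_tx(tx, dict(self.storage[addr]), ctx)  # read-only copy
            self.contracts[addr](self.storage[addr], tx)
            for aspect in bound:
                aspect.post_tx(tx, dict(self.storage[addr]), ctx)
        except Revert:
            self.storage[addr] = snapshot  # undo the contract's state changes
            raise


class MinReserveAspect:
    """Hypothetical Aspect: revert any call that leaves the reserve below a floor."""

    def __init__(self, floor: int) -> None:
        self.floor = floor

    def pre_tx(self, tx: Dict, state: Dict, ctx: Dict) -> None:
        ctx["reserve_before"] = state["reserve"]

    def post_tx(self, tx: Dict, state: Dict, ctx: Dict) -> None:
        if state["reserve"] < self.floor:
            raise Revert(f"reserve would drop from {ctx['reserve_before']} to {state['reserve']}")


def vault(storage: Dict[str, int], tx: Dict) -> None:
    """Hypothetical contract logic: withdraw from a single reserve."""
    storage["reserve"] -= tx["amount"]


chain = Chain()
chain.deploy("vault", vault, {"reserve": 100})
chain.bind("vault", MinReserveAspect(floor=50))
chain.call("vault", {"amount": 30})
print(chain.storage["vault"]["reserve"])  # → 70
```

A second 30-unit withdrawal would raise `Revert`, and the reserve would stay at 70. The vault itself has no notion of a floor.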

Aspect functions:

Compared with smart contracts, the functionality Aspects provide focuses mainly on pre- and post-transaction execution. Aspects do not replace smart contracts but complement them, giving applications the following unique capabilities:

- Automatically insert trusted transactions into blocks (e.g. for scheduled tasks).

- Revert state changes caused by a transaction (only for transactions calling contracts that have authorized this).

- Read static environment variables.

- Pass temporary execution state to other aspects downstream.

- Read the temporary execution state passed from the upstream Aspect.

- Dynamic and modular upgradeability.

The difference between aspects and smart contracts:

- Smart contracts are accounts with code, while aspects are native extensions of the blockchain.

- Aspects can run at different points in the transaction and block lifecycle, while smart contracts only execute at fixed points.

- Smart contracts have access to their own state and limited context of blocks, while aspects can interact with global processing context and system-level APIs.

- The Aspect execution environment is designed for near-native speed.

Aspect is just a piece of code logic and has nothing to do with the account, so it cannot:

- Write, modify, or delete contract state data.

- Create a new contract.

- Transfer, destroy or hold native tokens.

These aspects make Artela a unique platform that can extend the functionality of smart contracts and provide a more comprehensive and customizable development environment.

*Please note that strictly speaking, the above Aspect is also called a built-in Aspect, which is an embedded co-processor run by the Artela Chain full node. dApps can also deploy their own heterogeneous Aspects, run by external co-processors. These external coprocessors can be executed on an external network or by a subset of nodes in another consensus. It’s more flexible because dApp developers can actually do whatever they want with it, as long as it’s safe and sensible. It is still being explored and specific details have not yet been announced.

Potential use cases for embedded coprocessors

The complex calculations involved in new DeFi projects, such as complex game theory mechanisms, may require the more flexible and iterative on-the-fly computing power of embedded coprocessors.

A more flexible access control mechanism for various dApps. Currently, access control is usually limited to blacklisting or whitelisting based on smart contract permissions. Embedded coprocessors unlock immediate and granular levels of access control.

Certain complex features in fully on-chain games (FOCG). FOCG has long been constrained by the EVM. It might be simpler if the EVM kept simple functions like transferring NFTs and tokens, while other logic and state updates were computed by the coprocessor.

Security mechanisms. dApps can introduce their own active security monitoring and fail-safe mechanisms. For example, a liquidity pool could block withdrawals that exceed 5% of its liquidity within any 10-minute window. If the coprocessor detects such a withdrawal, the smart contract can halt and trigger an alert mechanism, such as injecting emergency liquidity within a dynamic price range.
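The withdrawal rule can be sketched as a sliding-window limiter. Two design choices are assumptions: the 5% cap is measured against the pool's liquidity at the time of each check, and amounts are integers with the cap expressed in basis points to avoid floating-point rounding. The class and method names are hypothetical.

```python
from collections import deque
from typing import Deque, Tuple


class WithdrawalLimiter:
    """Sliding-window check: total withdrawals in the last `window_secs` may not
    exceed `max_bps` basis points of the pool's current liquidity."""

    def __init__(self, max_bps: int = 500, window_secs: int = 600) -> None:
        self.max_bps = max_bps          # 500 bps = 5%
        self.window_secs = window_secs  # 600 s = 10 minutes
        self.recent: Deque[Tuple[int, int]] = deque()  # (timestamp, amount) of accepted withdrawals

    def _prune(self, now: int) -> None:
        # Drop withdrawals that fall outside the window (now - window_secs, now].
        while self.recent and self.recent[0][0] <= now - self.window_secs:
            self.recent.popleft()

    def allow(self, now: int, amount: int, pool_liquidity: int) -> bool:
        self._prune(now)
        withdrawn = sum(a for _, a in self.recent)
        if (withdrawn + amount) * 10_000 > self.max_bps * pool_liquidity:
            return False  # the pool would halt here and trigger an alert
        self.recent.append((now, amount))
        return True


limiter = WithdrawalLimiter()
print(limiter.allow(0, 300, 10_000))   # → True  (3% of liquidity)
print(limiter.allow(60, 250, 9_700))   # → False (would reach about 5.7% in 10 minutes)
print(limiter.allow(601, 250, 9_700))  # → True  (the first withdrawal left the window)
```

On a single chain, this bookkeeping competes with the pool's own logic for gas. An embedded coprocessor could run it alongside each withdrawal instead.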

Conclusion

As dApps inevitably grow large, bloated, and overly complex, coprocessors will inevitably become more popular. It's only a matter of time and of the adoption curve.

Running external coprocessors allows dApps to stay in their comfort zone: no matter which chain they were on previously. However, for new dApp developers looking for a deployable execution environment, embedded coprocessors are like the GPU on a PC. If it calls itself a high-performance PC, it has to have a decent GPU.

Unfortunately, none of the projects above has launched on mainnet yet, so we can't benchmark them or show which is best suited to which use case. One thing is undeniable, though: technology moves in an upward spiral. It may look like we're going in circles, but viewed from the side, history will show that technology really does evolve.

Long live the scalability triangle and long live the coprocessor.